Why ChatGPT’s New Memory Might Be the Most Underrated AI Breakthrough Yet

It’s not about bigger models anymore—it’s about the AI that knows you best. Memory is the moat, and this upgrade changes everything.

Good morning AI entrepreneurs & enthusiasts,

OpenAI just gave ChatGPT the biggest boost since GPT-4: persistent memory. Sam Altman said he couldn’t sleep before launch—because he knew memory would become the moat.

Why? Because the more you use ChatGPT, the more it knows you. It now builds a personal operating model around your preferences, behaviors, and patterns, at work and in life.

For most users, the size of the model doesn’t matter. What matters is how well the AI integrates into your world—and memory is the unlock.

This isn’t just about smarter responses. It’s about a smarter you, amplified by an AI that remembers what matters.

In today’s AI news:

ChatGPT’s Memory Upgrade

Mira Murati’s New Venture Chasing Historic Seed Round

Debugging: AI’s Blind Spot Exposed

Higgsfield Mix: Vibe Marketing’s New Visual Engine

Today's Top Tools & Quick News

ChatGPT’s Memory Upgrade

The News: OpenAI has rolled out a dual-layered memory upgrade to ChatGPT, combining explicitly saved memories and automatic chat history insights to deliver a far more personalized AI experience.

The Details:

ChatGPT now automatically absorbs your communication style, project context, and preferences during regular use.

You can review, edit, or delete memories anytime—or use Temporary Chat for privacy-first sessions.

The system stores approximately 1,200–1,400 words and may require periodic pruning when full.

Currently live for Pro/Plus users, with Enterprise, Edu, and Team plans to follow; not available yet in EU/UK/EEA due to compliance.

Why it matters: AI's ability to automatically store user memory may be one of the most underrated features of the user experience. With this update, every ChatGPT interaction deepens the memory moat, personalizing your experience even further. While impactful for users whose use cases span a variety of domains, this upgrade also raises important ethical questions about data control and transparency. It's important to remember that you can disable this feature, which keeps you in the driver's seat.
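For the builders in the audience, here is a rough sketch of what a word-capped memory with manual pruning could look like. It is a toy illustration only, not OpenAI's implementation: the `MemoryStore` class, its method names, and the 1,300-word default (the midpoint of the range quoted above) are all assumptions made for the example.

```python
class MemoryStore:
    """Toy word-budgeted memory. Hypothetical; not how ChatGPT works internally."""

    def __init__(self, max_words=1300):
        # Assumed budget: midpoint of the ~1,200-1,400 word range.
        self.max_words = max_words
        self.memories = []

    def words_used(self):
        # Count whitespace-separated words across all saved memories.
        return sum(len(m.split()) for m in self.memories)

    def save(self, text):
        """Store text if it fits in the remaining budget; return True on success."""
        if self.words_used() + len(text.split()) > self.max_words:
            return False  # Full: the user has to prune before saving more.
        self.memories.append(text)
        return True

    def delete(self, index):
        """Remove one memory by position, freeing its words."""
        return self.memories.pop(index)


# Tiny budget so the "full" case is easy to see.
store = MemoryStore(max_words=8)
store.save("Prefers concise answers")
store.save("Runs a weekly AI newsletter")
fits = store.save("Based in Berlin")        # Rejected: budget exceeded.
store.delete(0)                             # Manual pruning frees space.
fits_after = store.save("Based in Berlin")  # Now it fits.
print(fits, fits_after, store.words_used())
```

The design choice worth noting is that nothing gets dropped silently: when the store is full, the save is refused and the user decides what to forget, which mirrors the review/edit/delete controls described above.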

Mira Murati’s New Venture Chasing Historic Seed Round

The News: Former OpenAI CTO Mira Murati is spearheading Thinking Machines Lab, a stealth-mode AI startup now seeking one of the largest early-stage rounds in industry history.

The Details:

Initially targeting ~$1B at a $9B valuation, the startup now seeks over $2B at a $10B+ valuation—doubling expectations.

Two-thirds of the ~30-person team are OpenAI veterans, including John Schulman, Barret Zoph, Bob McGrew, and Alec Radford.

The company has no public product yet, but it focuses on modular AI systems for use in manufacturing, life sciences, and human-AI collaboration.

Why it matters: The investor race around talent-first, pre-product AI startups like Murati’s—and Ilya Sutskever’s SSI—signals a clear market tilt toward proven operators. Murati’s ability to attract elite AI talent post-OpenAI reinforces her credibility as a leader. The $2B+ ask also underscores just how high the cost of frontier AI development has climbed—compute, research, and retention are now billion-dollar bets.

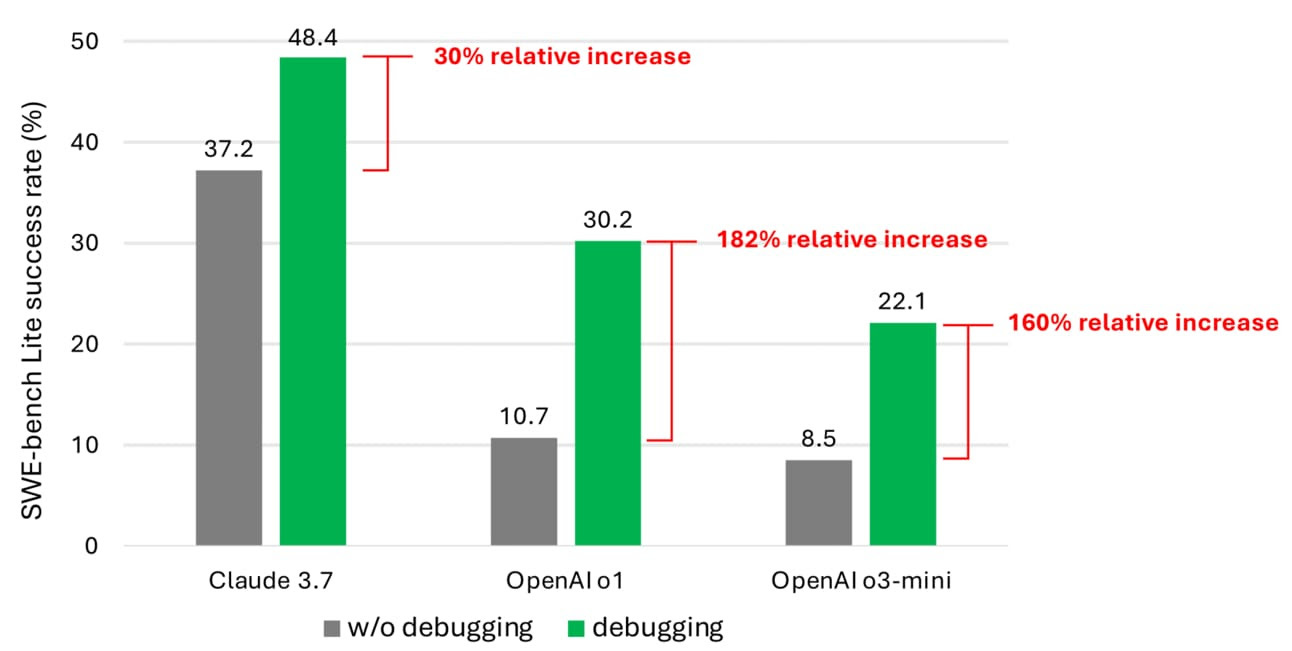

Debugging: AI’s Blind Spot Exposed

The News: Microsoft’s recent study highlights the limitations of current AI systems in performing debugging tasks that human engineers typically handle with ease.

The Details:

In SWE-bench Lite tests, Claude 3.7 Sonnet solved 48.4% of 300 issues, while OpenAI’s o1 and o3-mini models scored 30.2% and 22.1% respectively.

The models struggled due to insufficient exposure to sequential debugging data during training.

OpenAI’s SWE-Lancer benchmark showed Claude 3.5 Sonnet achieving just 26.2% on implementation tasks across real-world Upwork challenges.

Microsoft has integrated agent-debugging into its 365 Copilot suite, aiming to ease troubleshooting for developers without relying on traditional IDEs.

Why it matters: These findings underscore AI’s ongoing limitations in multi-step reasoning and context-sensitive problem solving. While AI coding tools show immense promise in assisting with boilerplate generation or suggestions, they remain far from replacing human developers in nuanced debugging. The focus is shifting toward making AI a co-pilot—not a coder replacement—especially for mission-critical software work.

Higgsfield Mix: Vibe Marketing’s New Visual Engine

The News: Higgsfield AI’s newly launched Mix is a breakthrough cinematic engine tailor-made for AI-first content teams and vibe marketers. It’s not just redefining video creation—it’s setting a new creative standard for scroll culture, where emotional resonance, motion aesthetics, and narrative rhythm are everything.

The Details:

Mix allows creators to merge complex moves—like vertical dives and snorricam spins—into a single, fluid shot.

Execute advanced camera language like dolly zooms or overhead sweeps via text prompts, a major leap in intentionality for AI-generated video.

Plug-and-play camera effects ranging from tension-building slow pushes to chaotic boltcam drama—all without touching a rig.

Built on a fusion of diffusion modeling and reinforcement learning, it ensures cinematic consistency across every scene.

Why it matters: For vibe marketers, Mix may be one of the most valuable video generation tools we've seen yet. It democratizes professional-grade video production by removing the cost and the barrier to entry of high-end camera work and equipment. In the quickly growing market for video generation tools, Mix stands out as an intentional and powerful way to achieve professional-grade production without the bloated budget or post-production pipelines.

TODAY'S TOP TOOLS

Gemini 2.5 Flash: Google’s fast, cost-efficient reasoning model with dynamic processing for real-time applications like support chat and document workflows.

Kimi-VL: Moonshot AI’s MoE vision-language model with 2.8B activated parameters that challenges giants like GPT-4o-mini, excelling at long-form multimodal reasoning and OCR.

Pika Twists: AI-powered video tool for scene editing via prompt-based object manipulation—ideal for creators aiming for viral visual storytelling.

askplexbot: Perplexity AI’s Telegram-based assistant offering real-time chat, coding, translation, and research in group conversations.

QUICK NEWS

Safe Superintelligence (SSI) partners with Google Cloud for TPU compute

Canva unveils Visual Suite 2.0 + AI voice-based creator

OpenAI sues Elon Musk for breach of agreement

OpenAI launches BrowseComp, a benchmark for AI browsing skills

ByteDance drops Seed-Thinking-v1.5, a 200B parameter reasoning model

xAI’s Grok-3 API now public, priced at $3–$15 per million tokens

Writer releases AI HQ—enterprise agent supervision suite
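If you want to budget for the Grok-3 API pricing mentioned above, a quick back-of-the-envelope calculator might look like this. The split is an assumption: the sketch treats $3 per million as the input-token rate and $15 per million as the output-token rate, and the `estimate_cost` helper is hypothetical, so check xAI's pricing page before relying on it.

```python
def estimate_cost(input_tokens, output_tokens, input_rate=3.0, output_rate=15.0):
    """Estimate API spend in USD.

    Rates are USD per million tokens. Assumption: $3 applies to input
    tokens and $15 to output tokens (the item above only gives the range).
    """
    total = input_tokens * input_rate + output_tokens * output_rate
    return total / 1_000_000


# Example workload: 200k tokens in, 50k tokens out.
cost = estimate_cost(200_000, 50_000)
print(f"${cost:.2f}")
```

Output tokens dominate quickly at these rates, so long generated answers cost far more than long prompts.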

How do you see the future of memory? As we get larger and larger context windows, do you foresee this improving over time?
