OpenAI Just Released o3 — The Most Important Model Since GPT-3.5

Tool-using models, autonomous workflows, and the beginning of agentic infrastructure — what builders need to know now, not tomorrow.

This Is the Moment We’ve Been Waiting For

Just five hours ago, OpenAI unveiled what may become the defining inflection point in the next era of artificial intelligence: the launch of o3 and o4-mini.

It’s tempting to call this another incremental update. But that would miss the bigger shift unfolding behind the scenes. For the first time, we’re seeing a model not just built for intelligence — but for integration.

Normally, I would wait and cover this in tomorrow’s AI news. But when a model is trained from the ground up to interact with the world through autonomous reasoning, tool use, and multimodal understanding, there’s no time to waste in the AI race.

What’s Actually Different This Time?

Since GPT-3.5, we’ve seen a parade of launches claiming to offer “better reasoning” and “multimodal input.” But the o3 release introduces something fundamentally different: tool-augmented intelligence.

The o3 and o4-mini models bring together three core capabilities:

Multimodal cognition: They can interpret images (including blurry whiteboards or textbook scans) and use them as part of their active reasoning loop.

Autonomous tool use: In one example, o3 made more than 600 tool calls in a single response, deciding on its own when and how to use web search, Python, file analysis, image generation, and more.

Agentic architecture: These models don’t just execute a prompt. They run workflows.
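Under the hood, “autonomous tool use” boils down to a loop: the model either asks for a tool call or returns a final answer, and the surrounding harness runs the tool and feeds the result back. Here is a minimal sketch of that loop. The `fake_model` policy and both tools are hypothetical stand-ins made up for illustration, not OpenAI’s API; in a real system, that step would be a call to the model through an SDK.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolCall:
    name: str
    argument: str


@dataclass
class FinalAnswer:
    text: str


# Hypothetical tools standing in for web search, Python, file analysis, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
    "echo": lambda text: text,
}


def fake_model(history: list[str]) -> ToolCall | FinalAnswer:
    """Hypothetical stand-in for the model: call the calculator once, then answer."""
    if not any(entry.startswith("tool:") for entry in history):
        return ToolCall("calculator", "2+3+5")
    result = history[-1].removeprefix("tool:calculator=")
    return FinalAnswer(f"The sum is {result}")


def run_agent(prompt: str, max_steps: int = 10) -> tuple[str, int]:
    """Loop until the model answers; return the answer and the number of tool calls."""
    history = [f"user:{prompt}"]
    tool_calls = 0
    for _ in range(max_steps):
        step = fake_model(history)
        if isinstance(step, FinalAnswer):
            return step.text, tool_calls
        # Execute the requested tool and append its output to the history.
        output = TOOLS[step.name](step.argument)
        tool_calls += 1
        history.append(f"tool:{step.name}={output}")
    raise RuntimeError("step budget exhausted before a final answer")


print(run_agent("Add 2, 3 and 5"))  # → ('The sum is 10', 1)
```

The `max_steps` budget is the piece builders tend to forget: a model that can chain hundreds of tool calls in one response needs a harness that caps how long it may run.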

This launch lands amid a rising wave of AI systemization: Anthropic’s Model Context Protocol (MCP), Google’s Agent2Agent (A2A) protocol, and now o3, all aligned on one thesis:

Intelligence without action is incomplete.

OpenAI Is… Open-Sourcing Codex CLI?

While o3 grabbed headlines, the quiet release of Codex CLI might be just as disruptive.

It’s an open-source, terminal-native coding agent that can read, write, refactor, and execute code in your local environment. More importantly, it operates in three distinct approval modes:

Suggest: AI proposes edits; you approve them.

Auto Edit: AI edits files directly; you approve terminal actions.

Full Auto: AI runs autonomously within a sandboxed directory.

All of this is designed to run inside a git-tracked workspace, so every change can be reviewed and rolled back: the kind of maturity developers have been waiting for. And yes, it supports multimodal inputs like screenshots and diagrams.
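The three Codex CLI modes really differ in one thing: which actions need a human sign-off. Here is a minimal sketch of that permission matrix as I read the mode descriptions. The names are hypothetical; this is not Codex CLI’s source code or configuration format.

```python
from enum import Enum, auto


class Mode(Enum):
    SUGGEST = auto()
    AUTO_EDIT = auto()
    FULL_AUTO = auto()


class Action(Enum):
    EDIT_FILE = auto()
    RUN_COMMAND = auto()


# Actions listed for a mode run without asking; anything else needs human approval.
AUTO_APPROVED: dict[Mode, frozenset[Action]] = {
    Mode.SUGGEST: frozenset(),
    Mode.AUTO_EDIT: frozenset({Action.EDIT_FILE}),
    Mode.FULL_AUTO: frozenset({Action.EDIT_FILE, Action.RUN_COMMAND}),
}


def needs_approval(mode: Mode, action: Action) -> bool:
    """Return True if a human must approve this action in the given mode."""
    return action not in AUTO_APPROVED[mode]


for mode in Mode:
    gated = [a.name for a in Action if needs_approval(mode, a)]
    print(f"{mode.name}: approval required for {gated or 'nothing'}")
```

What the sketch leaves out is the part that makes Full Auto safe to use at all: the sandbox that confines the agent to its working directory.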

It’s the most developer-focused release OpenAI has made in years, and it addresses one of the loudest critiques of the company: a perceived lack of openness and local control.

"This isn’t just assistance. It’s agency — at the command line."

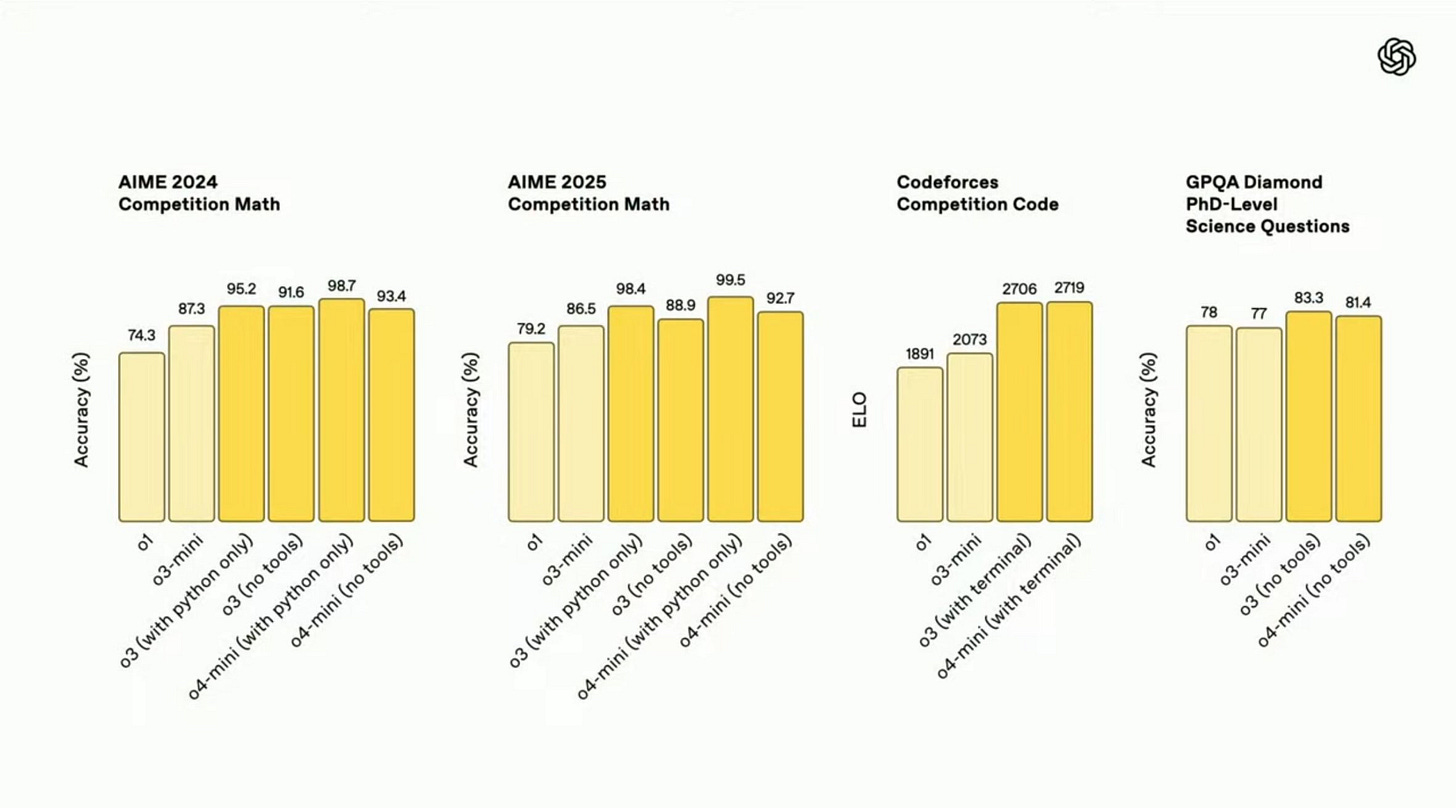

Behind the Benchmarks: The Growth Curve

If this feels like a step change, it’s because the numbers back it up. This model may force us to re-evaluate which benchmarks have already become obsolete.

Compared with the previous generation, as reported since o3 was first previewed in December 2024:

SWE-bench Verified: 48.9% → 71.7% (+47% relative, about 23 percentage points)

Codeforces Elo: 2300-range → 2727 (Top 0.2% of all human coders)

FrontierMath (Epoch AI): 12x performance gain

Token window: 2x

Output length: 3x
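A note on reading the SWE-bench line: +47% is a relative gain, while the absolute improvement is about 23 percentage points. A quick check using only the numbers quoted above:

```python
def gains(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = after - before
    relative = (after / before - 1) * 100
    return round(absolute, 1), round(relative, 1)


# SWE-bench Verified scores quoted above, in percent.
print(gains(48.9, 71.7))  # → (22.8, 46.6)
```

Relative gains flatter low baselines, which is worth remembering when reading a multiplier like the 12x FrontierMath figure.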

OpenAI scaled up both training-time compute and test-time compute (how much the model reasons before it answers). That is a training and inference choice rather than an architectural one, and it appears to be what drives the model’s stronger generalization on hard, dynamic workloads.

But performance like this comes at a cost.

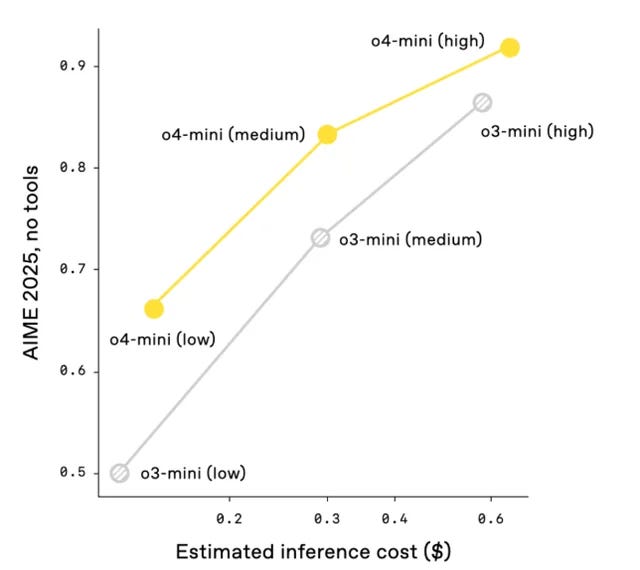

Let’s Talk Cost (Because It Matters)

The o3 model is positioned as a high-performance, high-precision tool, and its pricing reflects that. At $10 per million input tokens and $40 per million output tokens, it is priced well above most general-purpose models. That premium buys strong deep reasoning and autonomous tool use across complex workflows.

o4-mini, by contrast, comes in at $1.10 per million input tokens and $4.40 per million output tokens, roughly 9x cheaper. It’s built for speed and scale, making it a strong fit for high-volume use cases where cost-efficiency matters more than absolute precision.
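To make the per-token prices concrete, here is a small cost estimator using the launch prices quoted above. The workload (1,000 requests of 2,000 input and 1,000 output tokens each) is a made-up example, and prices change, so treat this as a sketch and check OpenAI’s pricing page before budgeting.

```python
# Launch prices quoted above, in USD per million tokens: (input, output).
PRICES: dict[str, tuple[float, float]] = {
    "o3": (10.00, 40.00),
    "o4-mini": (1.10, 4.40),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of a workload at the listed per-million-token prices."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical workload: 1,000 requests with 2,000 input + 1,000 output tokens each.
requests = 1_000
for model in PRICES:
    total = cost_usd(model, 2_000 * requests, 1_000 * requests)
    print(f"{model}: ${total:,.2f}")
```

For this token mix, o3 comes out at $60.00 and o4-mini at $6.60, about 9x cheaper, since both of o4-mini’s prices are 11% of o3’s. One caveat: reasoning models bill their hidden reasoning tokens as output tokens, so real output counts can be much larger than the visible answer.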

Put simply:

Use o3 when accuracy is mission-critical: workflows with complex logic, tool chaining, and little tolerance for error.

Use o4-mini when you need fast, capable, multimodal reasoning that scales economically across thousands of tasks.

Yes — o3 is 70x more expensive than some open models like DeepSeek R1. But that’s not a bug — it’s a signal. It’s a model designed for scenarios where being wrong costs more than running the model.

If you’re building AI into a system that makes decisions, executes code, or drives revenue — this is where you start making stack-level decisions.

So how do you integrate it strategically?

How I’d Stack This If I Were Building Right Now

For founders, CTOs, and product teams, here’s my recommendation:

GPT-4o at the UX layer — for conversational interfaces, fast feedback, and low-cost multimodal chat

o4-mini for logic and infrastructure — ideal for high-volume reasoning and document-based tasks

o3 at the core — powering backend orchestration, agentic workflows, tool use, and critical thinking tasks

Codex CLI for local dev automation — letting engineers build and iterate on agents inside their own sandboxed environments

Treat your AI infrastructure like your cloud infrastructure — with a layered stack, not a single monolithic model. Orchestration is now a design decision.
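The simplest version of that design decision is a router that maps each kind of task to a tier of the stack. The task categories and routing table below are my own illustrative assumptions mirroring the recommendation above, not an OpenAI feature; a production router would also weigh latency, budget, and fallbacks.

```python
from enum import Enum, auto


class Task(Enum):
    CHAT = auto()            # conversational UX, quick multimodal replies
    BULK_REASONING = auto()  # high-volume reasoning, document-based tasks
    ORCHESTRATION = auto()   # agentic workflows, tool chaining, critical decisions
    LOCAL_DEV = auto()       # coding automation inside a developer's sandbox


# Hypothetical routing table. "codex-cli" labels the local tool, not an API model name.
ROUTES: dict[Task, str] = {
    Task.CHAT: "gpt-4o",
    Task.BULK_REASONING: "o4-mini",
    Task.ORCHESTRATION: "o3",
    Task.LOCAL_DEV: "codex-cli",
}


def route(task: Task, mission_critical: bool = False) -> str:
    """Pick a tier for a task; escalate mission-critical API work to o3."""
    if mission_critical and task is not Task.LOCAL_DEV:
        return "o3"
    return ROUTES[task]


print(route(Task.BULK_REASONING))                         # → o4-mini
print(route(Task.BULK_REASONING, mission_critical=True))  # → o3
```

Keeping the routing rule in one small function means you can swap tiers as prices and models change without touching the rest of the system.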

The Age of Multimodal Reasoning

The o3 launch isn’t just about better models — it’s about a shift in what we expect from AI.

No longer just advisors or text predictors, these models are becoming operational systems that can analyze, decide, and act — all within one cognitive loop.

And Codex CLI? It’s the toolkit for building the first wave of local agents that can do real work.

The best part? This is just the beginning.

As founders, builders, and innovators — we now have access to systems-level intelligence that can execute with accuracy, adapt to complexity, and plug directly into how real work gets done.

The future of software isn't just faster. It's agentic. Multimodal. Intentional.

So the question isn’t if you’ll use these tools.

It’s how soon you’ll build with them.

And what you'll create when intelligence becomes infrastructure.
