Microsoft Breaks Free From OpenAI

Microsoft is betting on model independence: by hosting Grok, it’s signaling a future where OpenAI is optional, not essential.

Good morning AI entrepreneurs & enthusiasts,

Microsoft just dropped a bombshell in the AI world: it is reportedly preparing to bring Grok, from Elon Musk’s xAI, to Azure, sending a clear signal that it’s not betting the house on OpenAI anymore.

With accusations of private access and skewed experiments possibly warping the results of a top benchmarking platform, the AI model evaluation scene just got a lot murkier.

In today’s AI news:

Microsoft to host xAI’s Grok on Azure

Microsoft Unveils Compact Reasoning Models

NVIDIA slams Anthropic’s US export stance

Study scrutinizes top AI benchmark

Top Tools & Quick News

MICROSOFT & GROK 🤝 Microsoft to host xAI’s Grok on Azure

The News: Microsoft is in advanced talks to host Grok, the model developed by Elon Musk’s xAI, on Azure AI Foundry. The move would broaden Microsoft’s AI portfolio while reducing its reliance on OpenAI.

The details:

Grok would be made available through Azure AI Foundry, Microsoft’s platform for building and managing generative AI apps.

According to Reuters, Microsoft would only host the model and would not provide infrastructure for training future Grok versions.

The partnership lets Microsoft offer more model diversity beyond OpenAI, which currently powers services like Microsoft 365 Copilot.

Why it matters: Microsoft’s partnership with OpenAI has crossed a point of no return. OpenAI is now free to explore other cloud providers, and Microsoft answered with a calculated show of force: on the same day it launched its most powerful Phi reasoning models, it moved to bring Grok into Azure. Grok comes from Elon Musk’s xAI, OpenAI’s most combative rival. The move strains the alliance over access and infrastructure and accelerates Microsoft’s step back from training the models behind ChatGPT. Bold move, Microsoft, bold move.

MICROSOFT 🧠 Microsoft Unveils Compact Reasoning Models

The News: Microsoft introduced three new models from its Phi series, built for advanced reasoning in low-resource environments—and small enough to run on everyday devices.

The details:

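Phi-4-reasoning (14B params) and its reinforcement-learning-tuned sibling, Phi-4-reasoning-plus, rival much larger models such as DeepSeek-R1 (671B params) on reasoning benchmarks, according to Microsoft.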

Phi-4-mini-reasoning (3.8B params) competes with 7B models on math tasks and is small enough to run on phones.

The models are designed to power reasoning on edge devices and Copilot+ PCs.

Why it matters: Microsoft’s continued success with Phi shows how small models can punch above their weight. Beyond performance, this move reflects a broader strategic pivot: Microsoft is looking to reduce its dependency on OpenAI by diversifying its in-house AI offerings. These latest releases push us closer to everyday, high-performance AI running right on a phone or PC, and they mark a key moment in Microsoft's evolution as a model-neutral AI powerhouse.

NVIDIA vs. ANTHROPIC 🚨 NVIDIA slams Anthropic’s US export stance

The News: NVIDIA has publicly criticized Anthropic’s endorsement of the U.S. Framework for Artificial Intelligence Diffusion, a rule that would tighten export controls on AI chips.

The details:

Anthropic, which is backed by Amazon, supports tighter enforcement, citing reported cases of chips being smuggled into China hidden in “prosthetic baby bumps” and even packed alongside “live lobsters.”

NVIDIA mocked these claims as “tall tales” and argued that U.S. policy should emphasize innovation, not fear.

The company pointed to China’s deep AI talent pool, noting that the country already has about half of the world’s AI researchers, and argued against overregulation.

NVIDIA previously warned that new U.S. export restrictions on its H20 chips could cost it about $5.5 billion in charges.

Why it matters: This is one of the biggest divides I’ve seen in AI: security versus speed. Anthropic is lobbying for stricter controls, while NVIDIA says we’re handing China the edge if we clamp down too hard. This isn’t just policy; it will affect everyone building with AI. Compute access could get tighter, and cross-border innovation might slow. May 15, when the diffusion rule’s requirements are set to take effect, could be the line in the sand that reshapes how we build, scale, and compete globally.

AI BENCHMARKING 🎯 Study scrutinizes top AI benchmark

The News: A new report by researchers from Cohere Labs, MIT, and Stanford claims LMArena—one of the most influential AI benchmarks—may unfairly benefit major tech firms, distorting its popular rankings.

The details:

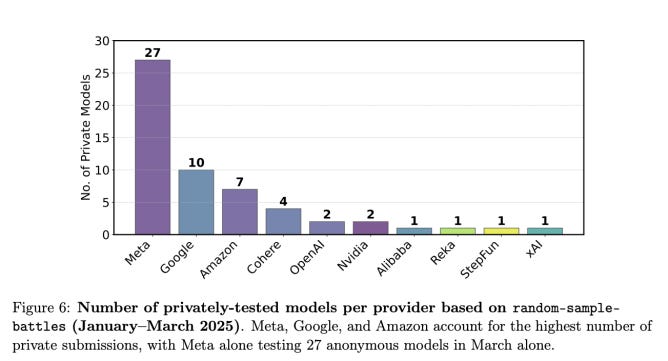

Big players like Google, Meta, and OpenAI allegedly test multiple model variants privately and publish only the best-scoring one. Meta, for example, tested at least 27 versions of Llama 4 before releasing the top performer. The gap between the experimental "Maverick" variant Meta submitted to the Arena and the version it released publicly exposed the pitfalls of benchmark-specific tuning.

Arena data shows OpenAI and Google accounted for over 60% of all sampling interactions, offering them more exposure and data to optimize models specifically for Arena tasks.

Access to Arena data reportedly boosts a model’s performance on Arena-style evaluations by up to 112% in relative terms, raising concerns about overfitting.

The study found that 205 models were silently removed from the platform, with open-source and open-weight entries phased out more often than proprietary ones; these providers were also given fewer opportunities for private testing and tuning.

Why it matters: LMArena disputes the findings, arguing that the leaderboard reflects real user preferences and pushing back on claims of data-access bias. Still, the study casts doubt on benchmarking fairness and, coming right after the Llama 4 Maverick controversy, underscores how fragile current AI evaluation systems are.

TOP TOOLS:

🎥 Gen-4 References – AI video creation with character consistency

🎨 Gemini App – Native image editing added

🧠 MiMo-7B – Xiaomi’s open small model with strong reasoning

📸 F-Lite – Freepik’s new open image generator

QUICK NEWS

Claude can now connect to external apps through Integrations built on the Model Context Protocol (MCP), enabling connections to tools like Zapier, Jira, Intercom, and more.

Google's AI Mode in Search is now available to all U.S. Search Labs users, providing AI-generated summaries and direct answers powered by Gemini models.

Suno v4.5 introduces longer music tracks, improved genre support, enhanced prompting, and new audio features.

Google debuts 'Little Language Lessons' via Gemini for multilingual, real-life scenario-based language learning.

Thanks for reading this far! Stay ahead of the curve with my daily AI newsletter—bringing you the latest in AI news, innovation, and leadership every single day, 365 days a year. See you in the next edition!