Meet Meta’s Llama 4 Family: Scout, Maverick, Behemoth

3 New Models. 10M Tokens. Is Llama 4 the Open-Source King?

Good morning AI entrepreneurs and enthusiasts,

Meta has officially unveiled the Llama 4 model family in a surprise weekend drop, bringing new open-weight models with massive context windows and strong benchmark results.

With the 2T-parameter "Behemoth" still in training and already claimed to outperform GPT-4.5, the big question remains: is this the next leap forward, or will real-world usage paint a different picture?

In today’s AI news:

Meta debuts Llama 4 family

Copilot receives major personalization update

OpenAI delays GPT-5, fast-tracks o3 and o4-mini

Top Tools & Quick News

META UNLEASHES LLAMA 4

The News: Meta released the Llama 4 family featuring multimodal support and industry-leading context lengths. Included are new open-weight models Scout and Maverick, plus a preview of the still-training 2T-parameter Behemoth.

The Details:

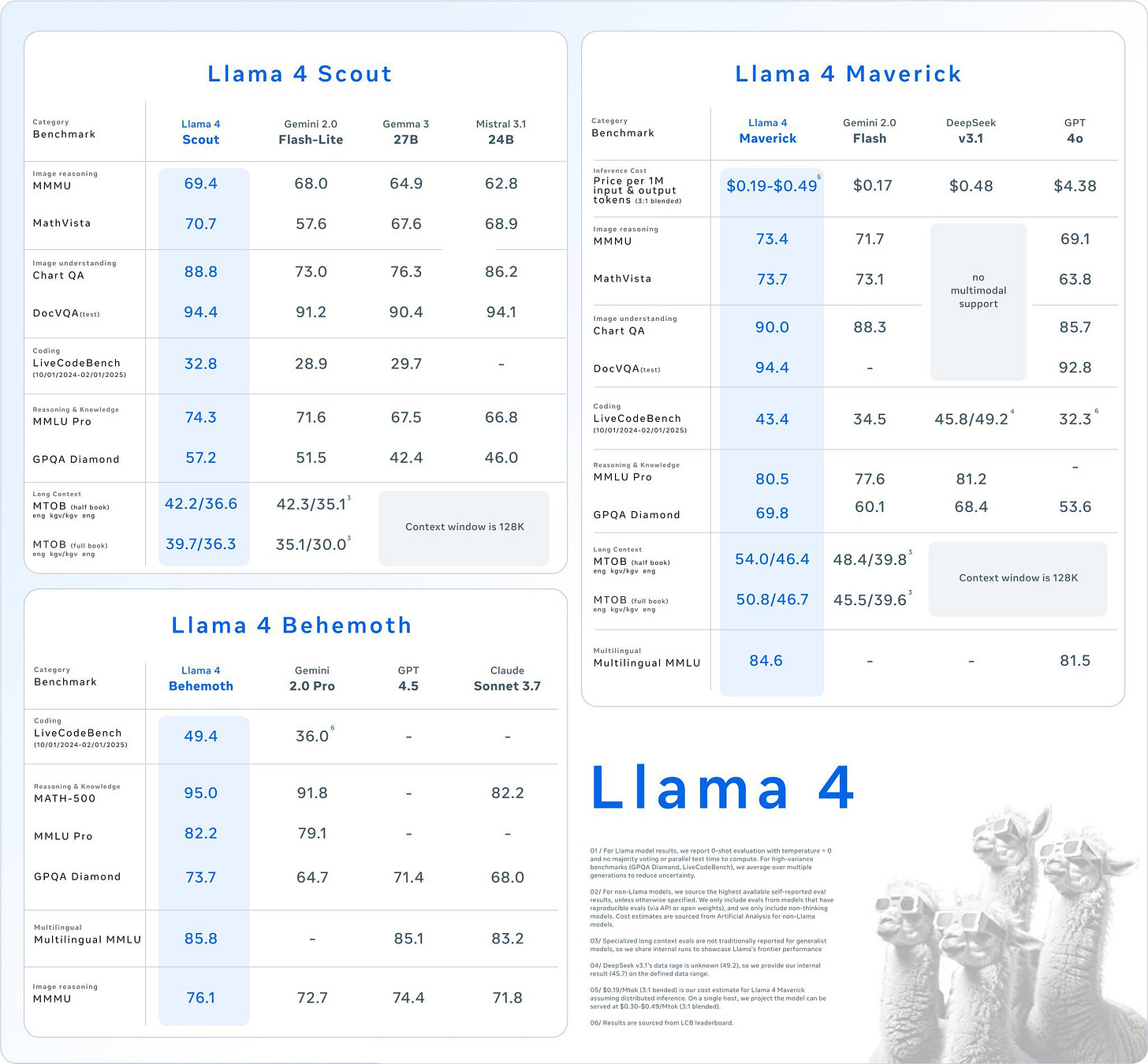

Scout, with 109B total parameters and a 10M-token context window, fits on a single H100 GPU (with Int4 quantization, per Meta) and outperforms Gemma 3 and Mistral 3.1 on Meta's reported benchmarks.

Maverick, at 400B parameters, supports a 1M-token context window and tops GPT-4o and Gemini 2.0 Flash on key benchmarks while keeping costs low.

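Behemoth (about 2T parameters) is still being trained but, according to Meta, already outperforms GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro on several STEM benchmarks.

All models use a Mixture-of-Experts (MoE) architecture: a router sends each token to only a small subset of "expert" sub-networks, so just a fraction of the total parameters is active per token, cutting compute and inference costs.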

Scout and Maverick are available now and integrated across Meta AI experiences on WhatsApp, Messenger, and Instagram.
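For readers curious how MoE keeps costs down, here is a toy Python sketch of top-k expert routing. It is not Meta's implementation: the class name `ToyMoELayer`, the tiny linear "experts", and the sizes used (16 experts, top-1 routing, 8-dimensional tokens) are all hypothetical, and real Llama 4 layers differ in expert design and other details. What it shows is the core idea: a router scores every expert, but only the top-scoring ones actually run for each token.

```python
import math
import random
from typing import List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w: List[Vector], x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


class ToyMoELayer:
    """Hypothetical toy MoE layer: a router picks top_k linear experts per token."""

    def __init__(self, dim: int, num_experts: int, top_k: int, seed: int = 0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router weights: one score per expert, computed as a dot product with the token.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each toy expert is a dim x dim linear map (real experts are feed-forward blocks).
        self.experts = [
            [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]
        # Counts how many times each expert was actually evaluated.
        self.calls = [0] * num_experts

    def forward(self, token: Vector) -> Vector:
        scores = matvec(self.router, token)
        # Keep only the top_k highest-scoring experts; the others are never computed.
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        # Renormalize the chosen experts' scores into mixing weights.
        gates = softmax([scores[i] for i in chosen])
        out = [0.0] * len(token)
        for gate, i in zip(gates, chosen):
            self.calls[i] += 1
            y = matvec(self.experts[i], token)
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out


if __name__ == "__main__":
    layer = ToyMoELayer(dim=8, num_experts=16, top_k=1)
    rng = random.Random(1)
    tokens = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(100)]
    outputs = [layer.forward(t) for t in tokens]
    # With top-1 routing, 100 tokens trigger 100 expert evaluations, not 16 * 100 = 1,600.
    print(sum(layer.calls))
```

The saving comes from the `chosen` slice: total parameters grow with the number of experts, while per-token compute grows only with `top_k`.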

Why it matters: After DeepSeek R1 shook the open-source scene earlier this year, Meta needed to deliver. Llama 4 seems like a major leap in performance and efficiency—but as always, how it feels to real users will ultimately define its success.

MICROSOFT COPILOT JUST LEVELED UP

The News: Microsoft unveiled a sweeping Copilot update, adding memory, new vision capabilities, web actions, and expanded productivity tools for a more integrated assistant experience.

The Details:

Persistent memory allows Copilot to build user profiles, retaining context like preferences and daily routines. Users can manage stored data through a dedicated privacy dashboard.

"Actions" let Copilot perform web-based tasks like booking reservations or buying items via browser integration, echoing features in OpenAI’s Operator and Amazon’s Nova Act.

Copilot Vision adds real-time camera input on mobile and can analyze on-screen content on Windows devices.

New productivity tools include Pages, Deep Research, and AI Podcasts to organize notes, conduct complex research, and generate custom audio summaries.

Why it matters: Microsoft is pushing Copilot to become a proactive digital assistant, though most features lean toward consumer use, raising questions about its edge over rivals like Google, OpenAI, and Meta in personal productivity. Copilot's deeper Windows and Office integration could give it a distinct advantage, but broader adoption may hinge on trust in its memory and automation features.

OPENAI DELAYS GPT-5, SURPRISE LAUNCHES COMING

The News: OpenAI is changing its roadmap, rolling out two intermediate models, o3 and o4-mini, before launching its highly anticipated GPT-5, which has now been delayed until later in 2025.

The Details:

o3 is optimized for advanced reasoning, STEM, and coding tasks, and includes a "Pro Mode" for complex problem-solving.

o4-mini provides cost-efficient performance, suitable for lightweight apps and services.

GPT-5 was postponed due to integration challenges and the need to scale infrastructure for anticipated demand (the recent Ghibli-style image craze showed how quickly that demand can spike).

GPT-5 is now expected in mid-to-late 2025, possibly with tiered subscription-based access.

Why it matters: OpenAI's roadmap adjustment is more than a strategic shuffle; it signals how the generative AI arms race is evolving. By releasing o3 and o4-mini ahead of GPT-5, OpenAI keeps shipping incremental improvements in a fast-moving market. The staggered rollout buys time to refine GPT-5 while giving developers and users new capabilities now.

Today's Top Tools

🕺 DreamActor-M1 - Animate full bodies from images using holistic control, multi-scale adaptability, and temporal coherence.

🛍️ Buy for Me - Amazon's AI agent that shops across the web, including non-Amazon retailers.

🎥 Adobe Premiere Pro - Adds Generative Extend and Media Intelligence for next-gen video editing workflows.

✨ ActionKit - Supercharge agents with 1,000+ integrations.

Quick News

Midjourney V7 introduces voice-enabled Draft mode and better image fidelity.

OpenAI considered buying Sam Altman and Jony Ive’s AI hardware startup for $500M.

Microsoft debuts Muse AI with a playable Quake II browser demo.

A judge denied OpenAI's motion to dismiss The New York Times' lawsuit, allowing its core copyright-infringement claims to proceed.