Kimi-Researcher Just Topped The World’s Toughest AI Exam

This signals a major shift from passive LLMs to autonomous agents that learn adaptively and solve real-world problems. This is what we've been waiting for.

Good morning AI entrepreneurs & enthusiasts,

Moonshot AI just made a serious statement.

Kimi-Researcher didn’t just beat the competition; it changed the conversation. It scored 26.9% Pass@1 on one of the toughest reasoning exams ever designed, working through an average of 23 reasoning steps and more than 200 web pages per task as it thinks, plans, and solves like a real expert.

In today’s AI news:

Moonshot AI’s Kimi-Researcher outperforms Gemini on Humanity’s Last Exam

Allegations Against DeepSeek

Anthropic scores a win (and a warning) in AI copyright ruling

Reid Hoffman backs AI-powered brain tech

Top Tools & Quick News

Moonshot AI’s Kimi-Researcher Surpasses Gemini on Humanity’s Last Exam

The News: Kimi-Researcher, the newest agent from Moonshot AI, has achieved a groundbreaking 26.9% Pass@1 score on Humanity’s Last Exam (HLE), outperforming Google’s Gemini and other top-performing AI models.

The Details:

What is HLE? Humanity's Last Exam is a rigorous benchmark designed to test deep, expert-level reasoning and problem-solving across more than 100 academic and professional domains. Nearly 1,000 subject matter experts contributed to its design.

Kimi-Researcher scored 26.9% Pass@1 (correct on the first attempt) and 40.17% Pass@4 (correct in at least one of four attempts), a massive improvement over its prior 8.6% Pass@1 baseline.

This leap was driven by agentic reinforcement learning (ARL), in which Kimi autonomously plans, reasons, and explores. According to AIBASE, each task involved an average of 23 reasoning steps and more than 200 web pages parsed.

Unlike agents that depend on hand-written prompts or fixed workflow templates, Kimi learned its strategies through trial and error during training, which lets it adapt to each novel question it faces.
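To make the Pass@1 and Pass@4 figures concrete, here is a minimal Python sketch of pass@k as it is commonly computed: sample n attempts per question, count the c correct ones, estimate the chance that at least one of k attempts succeeds as 1 - C(n-c, k)/C(n, k), then average over all questions. Moonshot AI has not said which estimator it used, so treat this as an assumption; the function names and the toy data are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate P(at least one of k attempts is correct) from n samples, c correct.

    Hypothetical helper using the common unbiased pass@k estimator.
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    # With fewer than k wrong attempts, every k-subset contains a correct one.
    if n - c < k:
        return 1.0
    # 1 minus the probability that all k attempts drawn (without replacement) are wrong.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average per-question pass@k; results holds one (n, c) pair per question."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical toy benchmark: 3 questions, 4 attempts each.
toy = [(4, 1), (4, 0), (4, 4)]
print(round(benchmark_pass_at_k(toy, 1), 3))  # 0.417
print(round(benchmark_pass_at_k(toy, 4), 3))  # 0.667
```

Because any set of k attempts that includes a correct one counts as a pass, pass@k never decreases as k grows, which is why a Pass@4 score like 40.17% sits above the corresponding Pass@1.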

Why It Matters: Kimi-Researcher signals a paradigm shift in agentic AI. Rather than merely generating fluent responses, it demonstrates autonomous, expert-level reasoning, the kind of complex cognitive work that has long been out of reach for LLMs. Surpassing Gemini on such a challenging benchmark places Moonshot AI at the leading edge of real-world problem-solving AI and raises the bar for what "intelligence" looks like in machines.

Allegations Against DeepSeek

The News: DeepSeek, a Chinese AI firm based in Hangzhou, has been accused by U.S. officials of actively supporting China’s military, bypassing U.S. semiconductor export bans using shell companies, and sharing user data with Chinese surveillance networks.

The Details:

The company appears over 150 times in Chinese military procurement documents, linking it to PLA research institutions.

DeepSeek reportedly used shell companies in Southeast Asia to acquire Nvidia H100 chips restricted by U.S. export controls, and sought remote access to U.S. chips via data centers.

It’s also accused of routing user data — including from American users — through Chinese infrastructure tied to state-owned China Mobile.

Why it matters: These allegations spotlight the geopolitical complexity of AI development, with major implications for export policy, user data protection, and the global AI arms race. While DeepSeek denies the claims, regulators worldwide are now re-evaluating their stance on Chinese-developed AI products.

Anthropic scores a win (and a warning) in AI copyright ruling

The News: In a class action lawsuit brought by authors, a federal judge just ruled that Anthropic’s AI training on legally bought books qualifies as fair use, but refused to extend that protection to the 7 million pirated titles the company also used.

The Details:

The judge described AI training as "spectacularly transformative," likening Claude’s learning to that of a writer studying others' work.

Plaintiffs couldn’t show Claude’s outputs resembled original works, undercutting claims of market harm.

However, the company also downloaded millions of books from pirate sources and kept them permanently, which the court found fair use does not excuse.

Anthropic now faces a December trial over alleged willful infringement, with potential statutory damages of up to $150,000 per pirated book.

Why It Matters: This decision offers rare clarity: in this court's view, training on legally obtained content can be fair use under U.S. law, while relying on pirated data carries staggering legal risk. For AI labs it is a foundational precedent, a small step toward defining the boundaries of LLM training but far from a complete resolution of the industry's copyright battles.

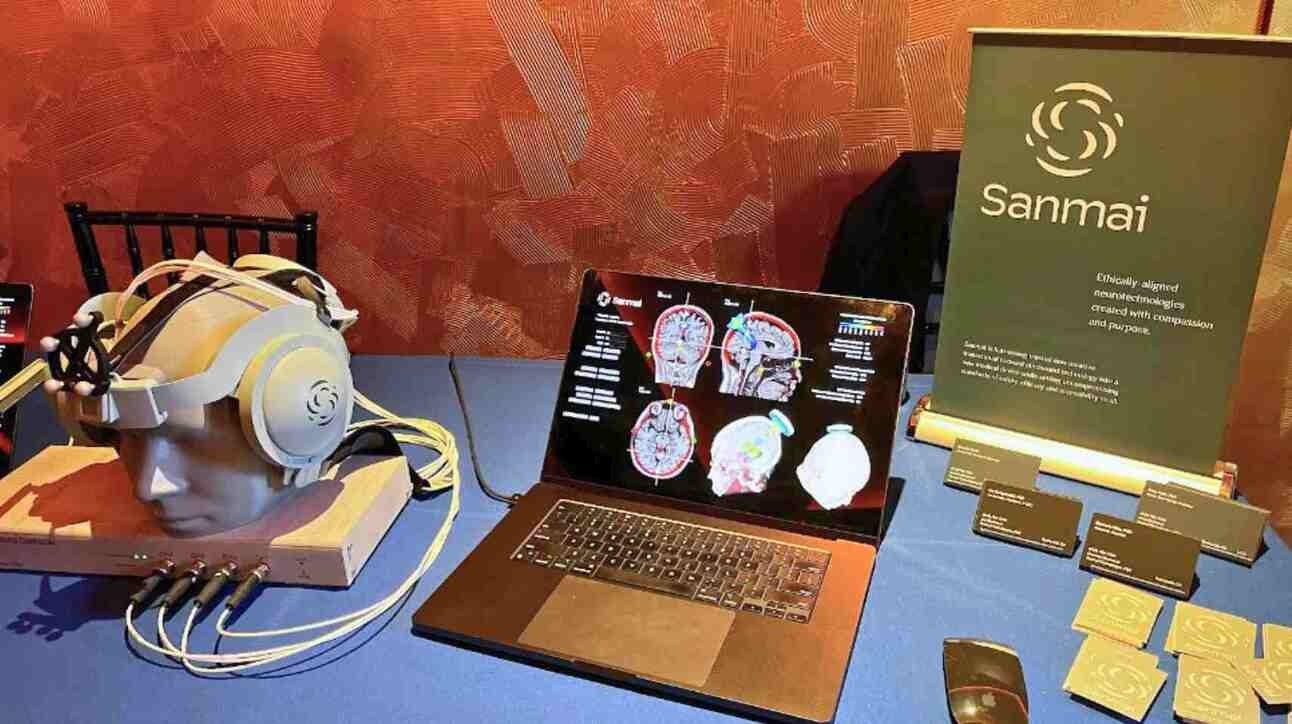

Reid Hoffman backs AI-powered brain tech

The News: Reid Hoffman just led a $12M round in Sanmai Technologies, which is developing a non-invasive AI ultrasound helmet for mental health that is expected to cost under $500.

The Details:

Sanmai uses focused ultrasound + AI-driven targeting to stimulate brain areas linked to anxiety and depression.

The helmet is designed for consumer in-home use.

Hoffman, backing the company through his Aphorism Foundation, joined Sanmai’s board and called its non-invasive approach a safer alternative to Neuralink.

Clinical trials are underway at Sanmai’s Sunnyvale lab, with FDA applications expected.

Why it matters: As AI meets neurotech, the “soft touch” approach — wearable, non-invasive, AI-guided — could win out over more extreme solutions like BCIs. The next interface might not go in your brain — it might just sit on top of it.

Today's Top Tools:

🗣️ 11ai – ElevenLabs' new voice assistant with MCP integrations

💻 Mu – Microsoft’s fast local AI for Copilot+ PCs

🎆 Imagen 4 Ultra – Google's flagship image model, now API-accessible

Quick News:

ChatGPT iOS downloads hit 29.6M in 28 days, nearly matching the combined total for TikTok, Instagram, and Facebook (32.6M)

Sam Altman called iyO’s trademark lawsuit over the ‘io’ name “silly and wrong,” accusing iyO founder Jason Rugolo of pushing for an acquisition

Mira Murati’s Thinking Machines Lab plans to develop custom AI models aimed at boosting business profits

Google launches Gemini Robotics On-Device, a fast vision-language-action (VLA) model that runs offline on robots

Andy Konwinski (Databricks/Perplexity) launched Laude Institute, pledging $100M for applied CS breakthroughs

XBOW’s autonomous AI topped HackerOne’s U.S. leaderboard, ahead of every human researcher, and raised a $75M Series B

Thanks for reading this far! Stay ahead of the curve with my daily AI newsletter—bringing you the latest in AI news, innovation, and leadership every single day, 365 days a year. See you tomorrow for more!

Kimi-Researcher’s step-wise reasoning reminds me of how consultants tackle thorny questions—slow but thorough. I’m keen to know whether end users will accept slower cycles if the output quality jumps, or if speed will still trump depth.

Surpassing Gemini on HLE suggests agentic LLMs are maturing fast, but we’ve seen benchmarks over-index on academic tasks before. What game plan does Moonshot envision for less structured problems like creative strategy or negotiation?