Inside Gemini 2.5 Flash: Budgeted Reasoning

Google’s Gemini 2.5 Flash introduces on-demand reasoning control—redefining how developers balance performance, precision, and cost in AI systems.

Good morning AI entrepreneurs & enthusiasts,

The era of affordable AI reasoning just got a major upgrade — Google has introduced Gemini 2.5 Flash, now in preview, delivering performance on par with leading models but at a far lower cost.

With a unique toggle that controls when reasoning activates, and a "thinking budget" to optimize for cost, quality, or speed, this might be the model that finally democratizes deep reasoning at scale.

IN TODAY'S AI NEWS:

Google’s Gemini 2.5 Flash with budgeted reasoning

Profluent uncovers scaling laws for protein-design AI

Meta FAIR’s new frontier in AI perception

Top Tools & Quick News

GOOGLE Gemini 2.5 Flash with Budgeted Reasoning

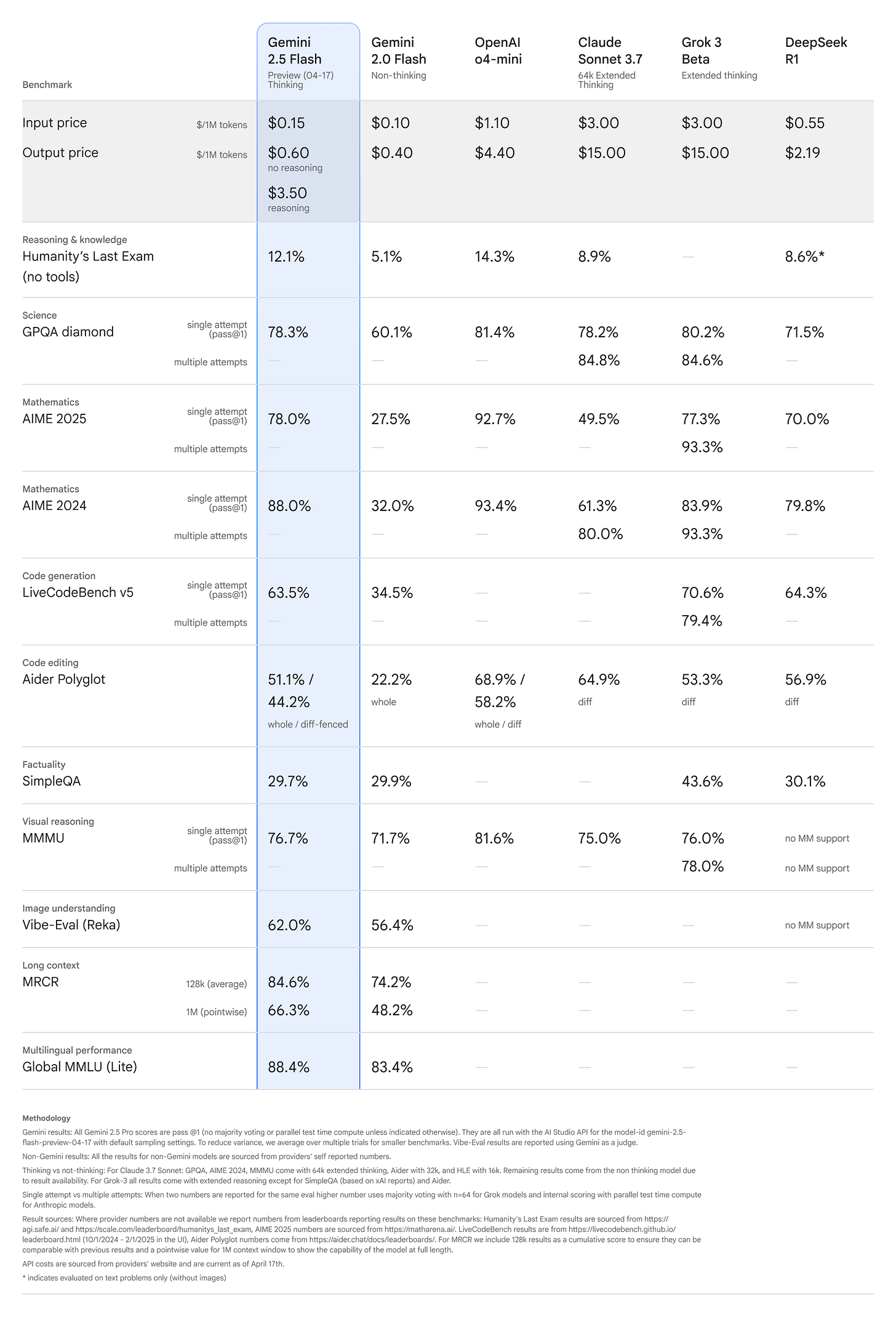

The News: Google has unveiled Gemini 2.5 Flash — a hybrid reasoning model now in preview that rivals o4-mini and surpasses Claude 3.5 Sonnet in STEM and logical benchmarks, while debuting a customizable "thinking budget" feature.

The Details:

A major leap over 2.0 Flash, Gemini 2.5 Flash introduces a toggle that switches its advanced reasoning on or off on demand.

It delivers strong results across STEM, multi-step reasoning, and visual understanding at a significantly lower cost.

Developers can set a thinking budget of up to 24,576 tokens to balance speed, accuracy, and cost.

Now available in preview through Google AI Studio and Vertex AI, plus as an experimental toggle in the Gemini app.
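To make the thinking budget concrete, here is a minimal sketch of a request body that carries one. It assumes the field names of the public Gemini REST API (`generationConfig.thinkingConfig.thinkingBudget`) and Google's guidance that a budget of 0 turns thinking off for 2.5 Flash. The helper name `build_request` is hypothetical, and nothing is actually sent to Google.

```python
import json

# Upper limit on the thinking budget for Gemini 2.5 Flash, as cited above.
MAX_THINKING_BUDGET = 24_576


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Return a generateContent-style JSON body with a thinking budget.

    Assumption: field names mirror the public Gemini REST API
    (generationConfig.thinkingConfig.thinkingBudget). A budget of 0
    turns thinking off; larger budgets allow more reasoning tokens.
    """
    # Clamp the requested budget into the supported 0..24,576 range.
    budget = max(0, min(thinking_budget, MAX_THINKING_BUDGET))
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"thinkingConfig": {"thinkingBudget": budget}},
    }


# Reasoning off for a quick lookup; a larger (clamped) budget for a hard proof.
print(json.dumps(build_request("What is the capital of France?", 0)))
print(json.dumps(build_request("Prove that there are infinitely many primes.", 50_000)))
```

Clamping on the client side keeps an over-eager caller from sending a value outside the documented range.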

Why it matters: While OpenAI's launches dominated headlines, Google is quietly delivering high-utility innovation. This "budgeted reasoning" feature allows developers to invoke deeper compute power only when necessary — paving the way for more flexible, cost-conscious AI implementations in real-world use cases.
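"Only when necessary" becomes a per-request decision in practice. The sketch below routes each prompt to a thinking budget with a toy keyword heuristic. The tier names, budgets, and keywords are all hypothetical choices for illustration, not Google defaults, and the budget is treated as a target the model aims for rather than a guaranteed ceiling.

```python
import re

# Hypothetical tiers: names and budgets are illustrative, not Google defaults.
BUDGET_BY_TIER = {"simple": 0, "moderate": 2_048, "hard": 8_192, "max": 24_576}

# Hypothetical keywords that suggest a prompt needs multi-step reasoning.
REASONING_HINTS = ("prove", "derive", "step by step", "optimize", "debug")


def classify(prompt: str) -> str:
    """Toy heuristic: more reasoning keywords (or a long prompt) -> harder tier."""
    text = prompt.lower()
    # Whole-word matching, so "improve" does not count as "prove".
    hits = sum(bool(re.search(rf"\b{re.escape(h)}\b", text)) for h in REASONING_HINTS)
    if hits >= 2:
        return "max"
    if hits == 1:
        return "hard"
    if len(text.split()) > 50:
        return "moderate"
    return "simple"


def pick_budget(prompt: str) -> int:
    """Spend thinking tokens only when the heuristic says the task needs them."""
    return BUDGET_BY_TIER[classify(prompt)]


for p in ["What year did the Berlin Wall fall?",
          "Debug this recursive function.",
          "Prove the bound, then derive the constant step by step."]:
    print(pick_budget(p), p)
```

The keyword list is only a placeholder; the design point is that simple lookups run with thinking off while hard prompts get a larger budget.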

PROFLUENT Scaling Laws in Protein Design

The News: Profluent introduced ProGen3, a model family that validates AI scaling laws in biology — confirming that more data and larger models produce better protein design outcomes.

The Details:

ProGen3's largest model, at 46B parameters, was trained on 3.4 billion protein sequences (1.5 trillion amino acid tokens).

Antibodies generated by ProGen3 match the effectiveness of FDA-approved drugs but are structurally distinct enough to avoid patent conflicts.

ProGen3 also designed gene-editing proteins less than half the size of CRISPR-Cas9, which could make them easier to deliver into cells.

20 "OpenAntibodies" are being released via royalty-free or upfront licensing.

Why it matters: This marks a turning point where biology transitions from trial-and-error science to predictable engineering. With scaling laws now validated in protein design, Profluent’s AI-driven models could compress drug discovery timelines and bring new therapies to market faster — especially in underserved disease categories.
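Scaling laws like these are commonly expressed as a power law, loss ≈ a · N^(-alpha), where N is model size. As a rough sketch of how such a law is estimated and then used for extrapolation, the code below fits one by least squares in log-log space. The measurements are synthetic and made up (generated from a = 5.0, alpha = 0.07); they are not ProGen3's results, and Profluent's actual methodology may differ.

```python
import math


def fit_power_law(sizes, losses):
    """Fit loss ≈ a * size**(-alpha) by least squares on log-transformed data."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(loss) for loss in losses]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Ordinary least-squares slope and intercept of log(loss) vs log(size).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, alpha)


# Synthetic, made-up measurements (NOT ProGen3 data): loss = 5.0 * N**-0.07.
sizes = [1e8, 3e8, 1e9, 3e9, 1e10]
losses = [5.0 * s ** -0.07 for s in sizes]

a, alpha = fit_power_law(sizes, losses)
print(f"a = {a:.3f}, alpha = {alpha:.3f}")
# Extrapolate to a larger model: the fitted curve predicts a lower loss.
print(f"predicted loss at 1e11 params: {a * 1e11 ** -alpha:.3f}")
```

This predictability is what "engineering rather than trial and error" means here: once the exponent is known, the payoff of a bigger model can be estimated before training it.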

META New Perception AI Research from Meta FAIR

The News: Meta’s AI research group has unveiled five new open-source projects targeting perception, 3D understanding, and collaborative reasoning — fundamental for building embodied AI and intelligent agents.

The Details:

Perception Encoder sets a new state of the art in recognizing subtle objects and movements, combining contrastive vision-language training with embeddings drawn from the network's intermediate layers.

PLM (Perception Language Model) is an open vision-language model, and PLM-VideoBench is a benchmark for detailed comprehension of complex videos, a skill agents need when operating in dynamic environments.

Locate 3D finds objects in 3D scenes from natural-language queries and comes with a dataset of over 130,000 language annotations, helping ground robot perception in natural language.

Collaborative Reasoner shows a 30% performance improvement when agents reason together, powered by synthetic multi-agent training data.
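"Contrastive vision-language training" usually refers to a CLIP-style objective: matching image-caption pairs are pulled together in embedding space and mismatched pairs are pushed apart. The following is a minimal pure-Python sketch of that generic symmetric InfoNCE loss, not Meta's Perception Encoder code. The temperature of 0.07 and the toy 3-dimensional embeddings are assumptions chosen for illustration.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def log_softmax_at(row, idx):
    """Numerically stable log-softmax of row, evaluated at position idx."""
    m = max(row)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
    return row[idx] - log_sum_exp


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss: image i and text i form the only positive pair."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image i, text j) / temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image-to-text direction: row i should rank text i highest.
    img_to_txt = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    # Text-to-image direction: column j should rank image j highest.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    txt_to_img = -sum(log_softmax_at(columns[j], j) for j in range(n)) / n
    return (img_to_txt + txt_to_img) / 2


images = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
matched = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.2], [0.1, 0.0, 1.0]]
shuffled = [matched[1], matched[2], matched[0]]
print(f"matched captions:  {contrastive_loss(images, matched):.4f}")
print(f"shuffled captions: {contrastive_loss(images, shuffled):.4f}")
```

Correctly paired captions yield a much lower loss than shuffled ones; training minimizes this gap across millions of real image-text pairs.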

Why it matters: These foundational models and datasets elevate the intelligence of machines that see, understand, and interact with their environment. Meta’s open-source approach accelerates embodied AI research and paves the way for more collaborative, socially aware intelligent systems.

TODAY'S TOP TOOLS:

Gamma 2.0: AI-crafted presentations and websites from prompts — Gamma is a real-time, collaborative design tool that turns outlines and prompts into visually stunning presentations and websites.

o3 and o4-mini: OpenAI's latest reasoning models, which can act agentically with tools like web browsing and file analysis and can reason over visual inputs. o4-mini is the fast, lightweight option, while o3 is optimized for complex reasoning.

Codex CLI: An open-source terminal agent for developers, allowing AI-assisted coding directly from the command line. Built by OpenAI for local use.

Copilot for GUIs: Microsoft’s experimental "computer use" feature lets agents control apps via GUI automation — early access now live via Copilot Studio.

QUICK NEWS:

OpenAI’s o3 scored 136 on Mensa Norway’s IQ test, topping Gemini 2.5 Pro’s reported score of 133.

Chatbot Arena (UC Berkeley) spins off into standalone company LMArena.

xAI’s Grok gains memory and Workspaces tab, offering personalized recall and chat organization.

Alibaba releases Wan 2.1-FLF2V-14B, an open model that generates video from a supplied first and last frame.

Deezer flags over 20K AI-generated songs per day, now 18% of all uploads.

OpenAI reportedly held talks to acquire Anysphere, the maker of Cursor, before pursuing its current $3B bid for Windsurf.

