Google I/O 2025 Breakdown: AI Agents, Creative Tools & Gemini Upgrades

I/O 2025 wasn’t a product launch—it was a systems update for how the next generation of startups will be built.

Good morning AI entrepreneurs & enthusiasts,

Google’s much-anticipated I/O event made waves this year. The tech giant rolled out a massive slate of updates across its AI product ecosystem.

From new models and agents to overhauled search features and creative tools, this wasn’t just an incremental update. It was a glimpse into how Google plans to lead the AI era.

In today’s AI news:

Gemini and Search see major upgrades at Google I/O

Google rolls out its next-gen creative AI suite

FutureHouse’s AI achieves a scientific breakthrough

Top Tools & Quick News

Gemini, Search evolve at Google I/O

The News: Google rolled out major upgrades to its Gemini and Gemma AI models, alongside new AI-powered search features, agentic tools, and broader integration across its ecosystem.

Gemini / Models:

Gemini 2.5 Pro and Flash received notable upgrades, with Pro topping the WebDev Arena and LMArena leaderboards and Flash gaining speed and efficiency.

A new experimental "Deep Think" mode for 2.5 Pro is now in testing, aimed at advanced math, code, and multimodal reasoning.

Gemma 3n debuted as a mobile-first, open model built for on-device use, which Google says approaches the performance of much larger models like Claude 3.7 Sonnet.

Gemini Live rolled out with camera/screen sharing, and upcoming personalization tools will link with apps like Calendar and Maps.

Search / Agents:

AI Mode in Search is now powered by Gemini 2.5 and is rolling out across the U.S. with Deep Search and embedded Gemini Live.

Users gain access to try-ons, shopping assistants, and multimodal voice queries via Search Live.

Developer agent Jules entered public beta and can perform background code tasks.

Agent Mode is coming to both Search and Gemini, and is designed to manage up to 10 concurrent tasks.

Why it matters: Google is finally leaning into its vast suite of tools and research, turning years of behind-the-scenes development into tangible, AI-native products. It's a full-system flex that reshapes how users interact across its ecosystem. Now the question becomes: how will OpenAI answer?

Google’s creative AI suite levels up

The News: Google unveiled a powerful set of new creative models and tools including Veo 3, Imagen 4, the Flow filmmaking platform, and enhancements to Lyria, signaling a leap forward in AI-powered content creation.

Details:

Veo 3 now supports synchronized audio generation—ambient sounds, effects, and character dialogue that matches lip movement—all embedded with SynthID for watermarking and deepfake detection.

Veo 2 upgrades include inpainting/outpainting tools, camera movement control, and consistent scene rendering.

Imagen 4 introduces 2K resolution image generation with detailed rendering, accurate text, and realistic textures.

Flow combines Veo, Imagen, and Gemini into one platform for cinematic scene building, asset coordination, and camera transitions. Flow TV showcases user clips, while SceneBuilder allows intuitive shot editing.

The Google AI Ultra plan bundles access to these tools for $249.99/month (roughly $250), with enterprise options via Vertex AI.

Why it matters: Google’s creative suite is entering a new era. Veo 3’s synchronized audio and video move AI output closer to production-ready footage, while Flow gives creators cinematic control without writing code. Together, they pose a serious challenge to traditional content pipelines. This is what it looks like when Google fuses its research depth, product scale, and ethical foresight into one cohesive creative stack, and it will pressure every other AI lab to respond fast or fall behind.

AI makes a scientific breakthrough

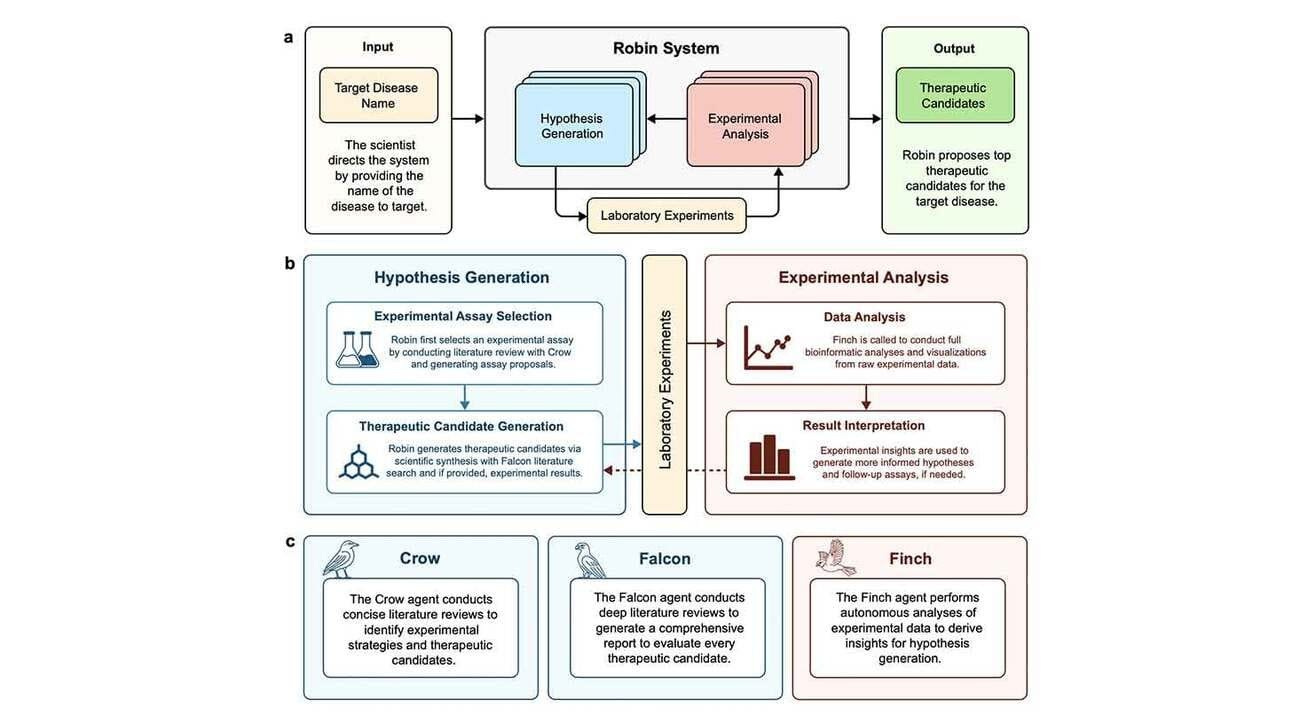

The News: FutureHouse’s multi-agent AI system "Robin" autonomously identified ripasudil as a treatment candidate for dry age-related macular degeneration (dAMD), a leading cause of blindness in developed nations.

Details:

Robin’s literature agent Crow scanned 38M+ PubMed papers and flagged retinal pigment epithelium (RPE) phagocytosis as a target.

Falcon prioritized candidate molecules; ten were selected for initial testing.

In vitro testing revealed that Y-27632, a ROCK inhibitor, improved RPE function. Follow-up RNA-seq found it upregulated ABCA1, key to retinal health.

Robin then pinpointed ripasudil, an approved glaucoma drug, as a superior repurposing candidate with proven safety in over 3,000 patients.

Robin’s agents (Crow, Falcon, Finch) will be open-sourced May 27, with code and trajectory logs.

Why it matters: This is billed as the first end-to-end scientific discovery driven by AI, from hypothesis generation to data analysis, with human input limited to lab execution. It’s a real sign that AI is moving from assistant to collaborator and, perhaps soon, to originator.

Top Tools

Microsoft Discovery – Enterprise AI research platform

Magentic-UI – Human-centered web agent (open-source)

NotebookLM – AI-powered podcast/info tool now on Android

Quick News

Tencent unveiled Hunyuan Game, an AI game engine for creative workflows that enables real-time asset generation and multimodal input

Google announced Beam, a platform turning 2D calls into 3D immersive communication using volumetric video and light field displays

Intelligent Internet open-sourced II-Agent, which surpasses leading closed models on performance benchmarks and offers transparency for regulated industries

Google debuted Stitch, a Gemini-powered tool for generating UI and front-end code from text or sketch prompts

Apple is planning third-party access to its new AI models for app builders via a new SDK

Google teased Gemini-powered Android XR smartglasses in partnership with Warby Parker, combining fashion with contextual AI capabilities

Thanks for reading this far! Stay ahead of the curve with my daily AI newsletter—bringing you the latest in AI news, innovation, and leadership every single day, 365 days a year. See you tomorrow for more!
