BREAKING NEWS: OpenAI has achieved AGI.

I never thought this day would come... Oh, you thought I meant Artificial General Intelligence? No. Just Ads Generated Income.

Good morning AI entrepreneurs & enthusiasts,

The ad-free era of AI assistants just ended. OpenAI announced it will begin testing targeted ads in ChatGPT — and CFO Sarah Friar’s simultaneous disclosure of $20B revenue (with a $17B burn rate) tells you exactly why. When your infrastructure costs grow 10x and your revenue only keeps pace, you pull every monetization lever available.

Sam Altman called ads for ChatGPT “a last resort” in 2024, so are we there yet?

In today’s news:

OpenAI officially bringing ads to ChatGPT

OpenAI hits $20B in revenue as compute scales nearly 10x

xAI activates Colossus 2: the world’s first gigawatt AI training cluster

Wikimedia announces historic AI partnerships with Big Tech

Today’s Top Tools + Quick News

📢 OpenAI Officially Bringing Ads to ChatGPT

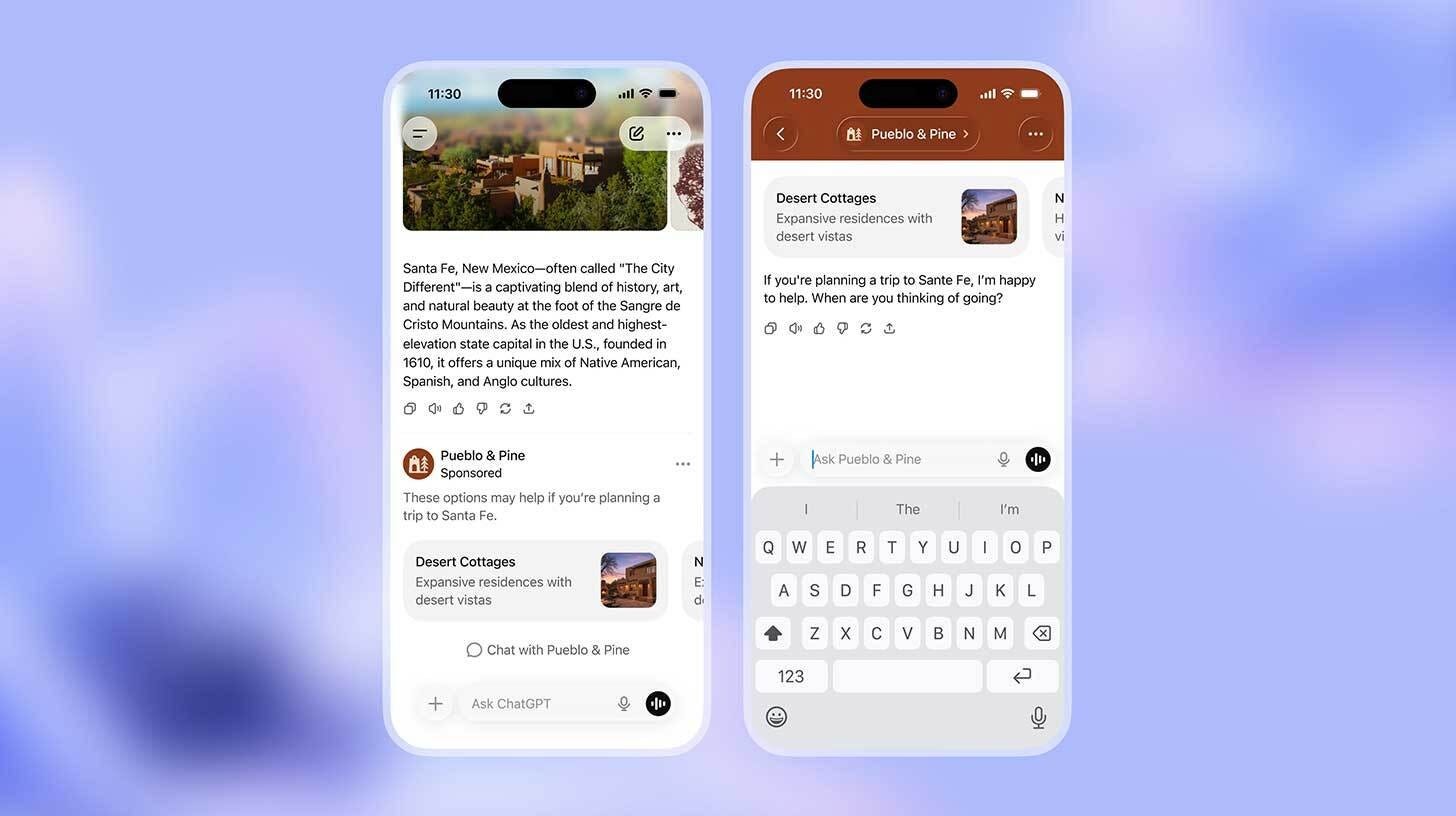

News: OpenAI just announced it will begin testing targeted advertisements in ChatGPT for free and Go tier users in the U.S. This sets in motion a major monetization shift for the AI giant as it eyes a late-2026 IPO.

Details:

Ads will surface below responses as “Sponsored Recommendations,” targeted based on conversations but kept out of health and political topics and not shown to underage users

The move coincides with the company’s $8/month ChatGPT Go tier launching globally, with ads included to offset the lower price point

Premium tiers (Plus, Pro, Business, Enterprise) remain ad-free, with OpenAI pledging to “never sell user data” or let ads influence ChatGPT’s answers

Altman had stated in 2024 that ads would be a “last resort,” but more recently said he “wasn’t totally against it” if it didn’t violate user trust

Why it matters: The math tells the story: $20B ARR, $17B burn, IPO in late 2026. OpenAI needs every revenue stream firing. But this is a slippery slope. If one giant rolls out ad monetization, others will follow, because nobody leaves money on the table. Model providers have unprecedented access to personal information, and leveraging that for personalized ads within chat crosses a line. I’m happy to pay extra to avoid ads, but it’s the principle that matters. Sam Altman once called this a “last resort,” and that’s the signal: is OpenAI pulling every lever it can pre-IPO?

💰 OpenAI Hits $20B in Revenue as Compute Scales Nearly 10x

News: OpenAI CFO Sarah Friar published a blog post revealing the company has crossed $20 billion in annualized revenue for 2025, up from just $2 billion in 2023 — a tenfold increase in two years. The disclosure, released alongside the ads announcement, makes the case that OpenAI’s aggressive infrastructure spending is producing proportional financial returns.

Details:

Compute grew 3X year over year or 9.5X from 2023 to 2025: 0.2 GW in 2023, 0.6 GW in 2024, and ~1.9 GW in 2025

Revenue followed the same curve growing 3X year over year, or 10X from 2023 to 2025: $2B ARR in 2023, $6B in 2024, and $20B+ in 2025

“This is never-before-seen growth at such scale,” CFO Sarah Friar said. “We firmly believe that more compute in these periods would have led to faster customer adoption and monetization.”

OpenAI’s annual burn rate has hit $17 billion, largely consumed by compute infrastructure, raising questions about sustainability ahead of the expected IPO

Looking ahead, OpenAI expects additional economic models, including licensing, IP-based agreements, and outcome-based pricing
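The growth multiples in the list above can be re-derived from the quoted figures. The short Python sketch below does that and also divides revenue by compute for each year. The variable and function names are made up for illustration, and the 2025 values are entered as 1.9 GW and $20B even though the source gives them as "~1.9 GW" and "$20B+", so treat the results as approximate.

```python
# Figures as quoted in the list above (2025 values are approximate in the source).
compute_gw = {2023: 0.2, 2024: 0.6, 2025: 1.9}      # gigawatts of compute
revenue_b = {2023: 2.0, 2024: 6.0, 2025: 20.0}      # annualized revenue, $ billions


def growth_multiples(series):
    """Return (list of year-over-year multiples, first-to-last multiple)."""
    years = sorted(series)
    yoy = [series[later] / series[earlier] for earlier, later in zip(years, years[1:])]
    total = series[years[-1]] / series[years[0]]
    return yoy, total


compute_yoy, compute_total = growth_multiples(compute_gw)
revenue_yoy, revenue_total = growth_multiples(revenue_b)

# Revenue per gigawatt of compute, in $ billions per GW.
revenue_per_gw = {year: revenue_b[year] / compute_gw[year] for year in compute_gw}

print("compute multiples:", [round(m, 2) for m in compute_yoy], round(compute_total, 2))
print("revenue multiples:", [round(m, 2) for m in revenue_yoy], round(revenue_total, 2))
print("revenue per GW ($B):", {y: round(v, 2) for y, v in revenue_per_gw.items()})
```

On these inputs, revenue per gigawatt stays close to $10B in all three years, which is the "revenue tracks compute" pattern the post describes.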

Why it matters: Friar’s blog post is a calculated move to reassure investors ahead of OpenAI’s expected IPO filing. “Our ability to serve customers — as measured by revenue — directly tracks available compute,” she wrote, essentially arguing that revenue rises in step with infrastructure spending. But the subtext is clear: OpenAI is burning $17 billion annually against $20 billion in revenue, and that is not a sustainable margin. When your infrastructure provider needs to 10x revenue just to keep pace with 10x compute costs, expect pricing and policy changes that prioritize its economics over yours (hmm, like ads!).

⚡ xAI Activates Colossus 2: The World’s First Gigawatt AI Training Cluster

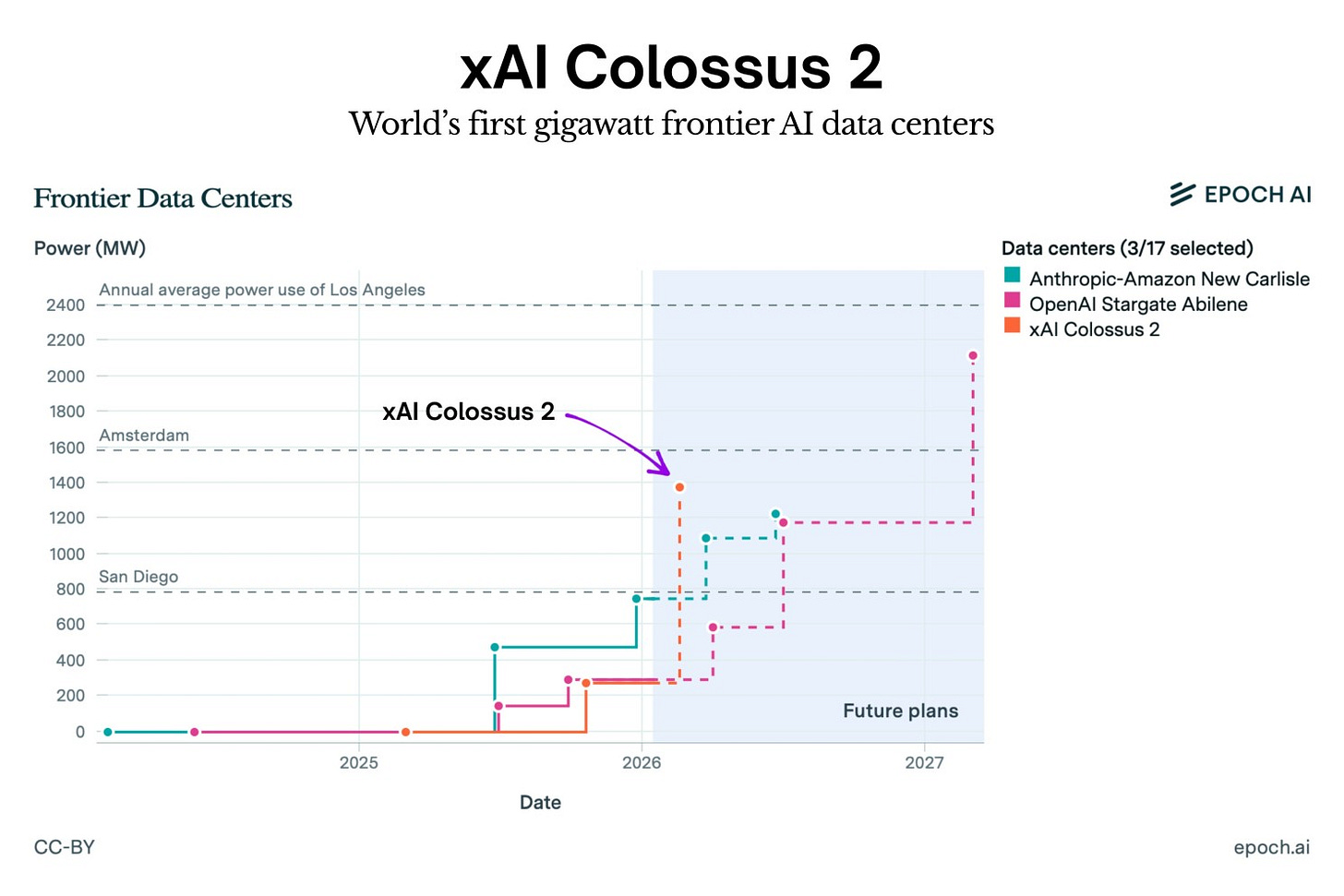

News: Elon Musk announced that xAI’s Colossus 2 supercomputer is now fully operational, making it the first AI training cluster in history to reach gigawatt-scale power consumption. The Memphis-based facility, dedicated to training the Grok model, draws more electricity than the peak demand of San Francisco — and it’s set to grow even larger by spring.

Details:

In a post on X, Musk stated that Colossus 2 is already operational, making it the first gigawatt training cluster in the world, with upgrades to 1.5 GW of power planned for April

The facility will house 555,000 NVIDIA GPUs purchased for approximately $18 billion — making it the world’s largest single-site AI training installation

Colossus 1 went from site preparation to full operation in 122 days. NVIDIA CEO Jensen Huang called the buildout “superhuman,” noting that xAI went from hardware installation to training in 19 days, while a data center of that size typically takes about 4 years to plan and build

xAI’s Colossus 2 launch follows the company’s recently closed $20 billion Series E funding round, which exceeded its initial $15 billion target

xAI builds its own power generation on-site rather than waiting for utility interconnection, including a gas-fired power plant adjacent to the data center — 2 GW total load is equivalent to powering roughly 1.5 million homes
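As a rough sanity check on the homes comparison, the sketch below works out the average draw per home implied by the quoted figures (2 GW and 1.5 million homes). The names are hypothetical, and the annual figure assumes a constant load over a 365-day year, which is a simplification made here and not something stated in the announcement.

```python
# Quoted figures from the list above.
TOTAL_LOAD_GW = 2.0
HOMES = 1_500_000

WATTS_PER_GW = 1e9
HOURS_PER_YEAR = 24 * 365  # assumption: non-leap year, constant load

# Average continuous draw per home implied by the comparison, in kilowatts.
avg_kw_per_home = TOTAL_LOAD_GW * WATTS_PER_GW / HOMES / 1000

# The same figure expressed as annual consumption per home, in kWh.
annual_kwh_per_home = avg_kw_per_home * HOURS_PER_YEAR


def homes_equivalent(load_gw):
    """Number of homes a given load would cover at the implied per-home average."""
    return load_gw * WATTS_PER_GW / (avg_kw_per_home * 1000)


print(f"implied average draw: {avg_kw_per_home:.2f} kW per home")
print(f"implied annual use:   {annual_kwh_per_home:.0f} kWh per home")
print(f"1.5 GW covers about   {homes_equivalent(1.5):,.0f} homes")
```

At the same ratio, the 1.5 GW level mentioned for April works out to a little over 1.1 million homes.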

Why it matters: While competitors are still drafting roadmaps, xAI is already drawing as much power as a major city. OpenAI’s Stargate and Anthropic’s AWS buildout remain in planning or partial deployment, which leaves xAI as the only player running a single gigawatt-scale training cluster today. xAI has effectively reset the pace of the AI infrastructure race, showing that vertical integration and breakneck execution can compress what typically takes years into weeks. For AI startups: compute is becoming the new oil, and vertical integration is the moat. If you can’t build your own power plant, your strategy needs to account for who can.

📚 Wikimedia Announces Historic AI Partnerships with Big Tech

News: The Wikimedia Foundation has publicly revealed new partnerships with Amazon, Meta, Microsoft, Mistral AI, and Perplexity, formalizing paid access to Wikipedia’s 65 million articles across 300+ languages through its Wikimedia Enterprise platform. Announced alongside Wikipedia’s 25th anniversary, these deals mark a fundamental shift in how the world’s largest nonprofit encyclopedia sustains itself in the age of generative AI.

Details:

In addition to the previously announced partnership with Google in 2022, the organization shared publicly for the first time that it has formed other partnerships with Amazon, Meta, Microsoft, Mistral AI, and Perplexity over the past year

Global audiences view Wikipedia’s more than 65 million articles in over 300 languages nearly 15 billion times every month, and that knowledge powers generative AI chatbots, search engines, voice assistants, and more

Instead of requiring companies to parse HTML like traditional web scrapers, Wikimedia Enterprise provides pre-formatted, structured datasets optimized for training pipelines — reducing processing time from hours to minutes and including metadata about article reliability, edit history, and citation completeness

Wikipedia’s human page views fell 8% year-over-year as of October 2025, as AI chatbots answer queries using Wikipedia content without directing users to the site

Why it matters: For years, tech companies treated Wikipedia as an unlimited free resource while building billion-dollar AI products. “They’ve been absolutely hammering our servers,” said Jimmy Wales. These partnerships represent more than revenue diversification — they’re establishing a new precedent for the AI data economy. If your models were trained on scraped Wikipedia content, take note: the era of free training data is closing fast.

TODAY’S TOP TOOLS

💼 Claude Cowork — Claude Code’s agentic abilities, now available to Pro tier

⚙️ Replit — New capabilities to easily build and deploy mobile apps

📸 FLUX.2 Klein — BFL’s new ultra-fast, powerful AI image editing model

This is hilarious

Strong framing on the AGI pun, but the revenue-to-burn math is the real story. $20B ARR with $17B burn means OpenAI's net margin is basically 15%, which sounds healthy until you realize compute costs are scaling faster than pricing power can catch up, forcing monetization experiments like ads that risk degrading the core product. I dunno if this is really about IPO optics or genuine unit economics pressure, but either way it signals the entire LLM provider space is gonna face similar margin compression. Saw this pattern in early cloud infrastructure where the first mover hits capacity constraints and has to choose between quality or profitability.