AI Week in Review: 10x Code Meets Infinite Context — The Next Leap in Intelligence

I’ve been reporting on AI for over 540 days. This week stood out - not for what launched, but for what shifted: how we define capability, creation, and intelligence itself.

This week, the boundaries of intelligence shifted again. Cognition and MIT tore through AI’s performance ceilings: Cognition with 10x faster code retrieval, MIT with recursive models that stretch context to infinity. For the first time, the smartest minds in the field formalized what AGI really means, while Anthropic doubled down with Haiku 4.5 and Skills, blending speed, safety, and collaboration into one coherent system.

But the cultural current moved just as fast. Google’s Veo 3.1 arrived quietly, Runway built the scaffolding for a new creative class, and Mark Cuban hacked virality itself—turning likeness into self-propagating media. AI now writes half the internet, yet human words still carry more trust—for now.

COGNITION ⚡ SWE-grep enables 10x faster code retrieval

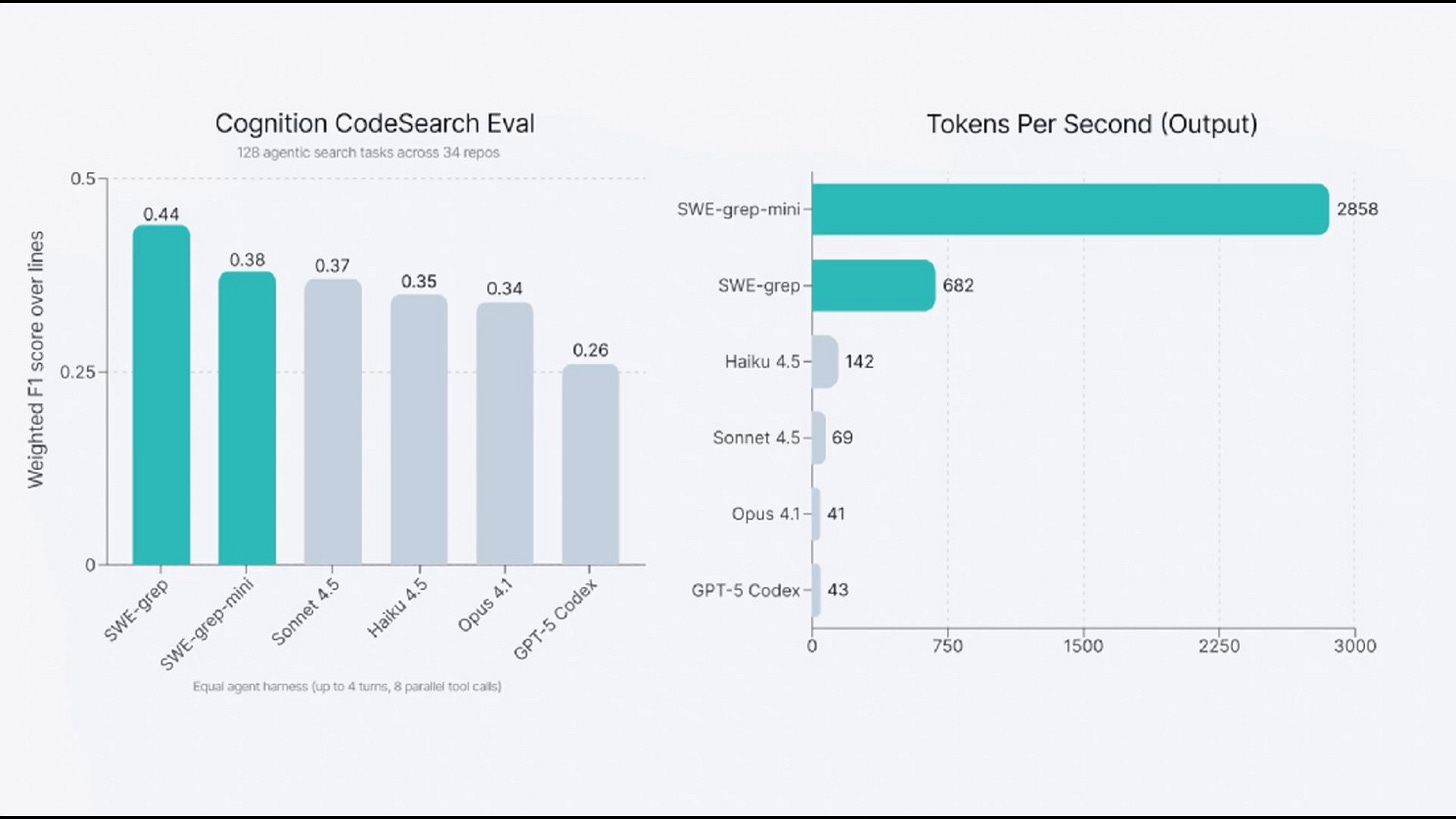

News: Cognition has unveiled a new family of models, SWE-grep and SWE-grep-mini, delivering massively accelerated codebase search for AI-powered coding agents.

Details:

Trained with multi-turn reinforcement learning and capable of 8 parallel tool calls per turn

Matches or outperforms top coding models while retrieving relevant code in as few as 4 turns

SWE-grep-mini achieves over 2,800 tokens/second vs. ~43 for GPT-5 Codex.

Integrated into Windsurf as the Fast Context subagent, enabling low-latency, flow-preserving search across million-line codebases

Prioritizes safety with sandboxed tool use (e.g., grep, read, glob)

Why it matters: By drastically reducing search time without compromising accuracy, it makes AI coding assistants feel real-time, safe, and deeply context-aware. It’s a major step toward seamless, intelligent dev workflows.
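To make the "parallel tool calls per turn" idea concrete, here is a minimal sketch of a search loop that fans out up to 8 read-only grep calls per turn and stops within 4 turns. It is not Cognition's implementation: `grep_tool`, `run_turn`, `search`, and the fixed `plan` (a stand-in for whatever the model would decide to search next) are hypothetical names, and an in-memory dict of files stands in for a sandboxed workspace.

```python
import re
from concurrent.futures import ThreadPoolExecutor

MAX_PARALLEL_CALLS = 8  # per-turn fan-out quoted in the Details above
MAX_TURNS = 4           # turn budget quoted in the Details above


def grep_tool(files, pattern):
    """Read-only tool: return (path, line_no, line) for every matching line."""
    rx = re.compile(pattern)
    return [
        (path, n, line)
        for path, text in files.items()
        for n, line in enumerate(text.splitlines(), start=1)
        if rx.search(line)
    ]


def run_turn(files, patterns):
    """Issue up to MAX_PARALLEL_CALLS grep calls concurrently and merge the hits."""
    batch = patterns[:MAX_PARALLEL_CALLS]
    with ThreadPoolExecutor(max_workers=MAX_PARALLEL_CALLS) as pool:
        results = pool.map(lambda p: grep_tool(files, p), batch)
        return [hit for hits in results for hit in hits]


def search(files, plan):
    """Run the plan one batch per turn and stop at the first turn with hits.

    `plan` is a hypothetical stand-in for the model's policy: a list of
    pattern batches, one batch per turn.
    """
    for turn, patterns in enumerate(plan[:MAX_TURNS], start=1):
        hits = run_turn(files, patterns)
        if hits:
            return turn, hits
    return min(len(plan), MAX_TURNS), []


# Toy workspace held in memory, so the tool never touches the real filesystem.
files = {
    "auth.py": "def login(user):\n    return check_token(user)\n",
    "tokens.py": "def check_token(user):\n    return user in ACTIVE\n",
}
plan = [["def logout", "class Session"], ["def check_token", "ACTIVE ="]]
turn, hits = search(files, plan)
print(turn, hits)
```

In this toy run the first batch finds nothing and the second batch locates the definition of `check_token`, so the search ends on turn 2. A real agent would choose each batch based on the previous turn's results rather than follow a fixed plan.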

🧠 MIT Recursive Language Models (RLMs): Breaking the Context Barrier

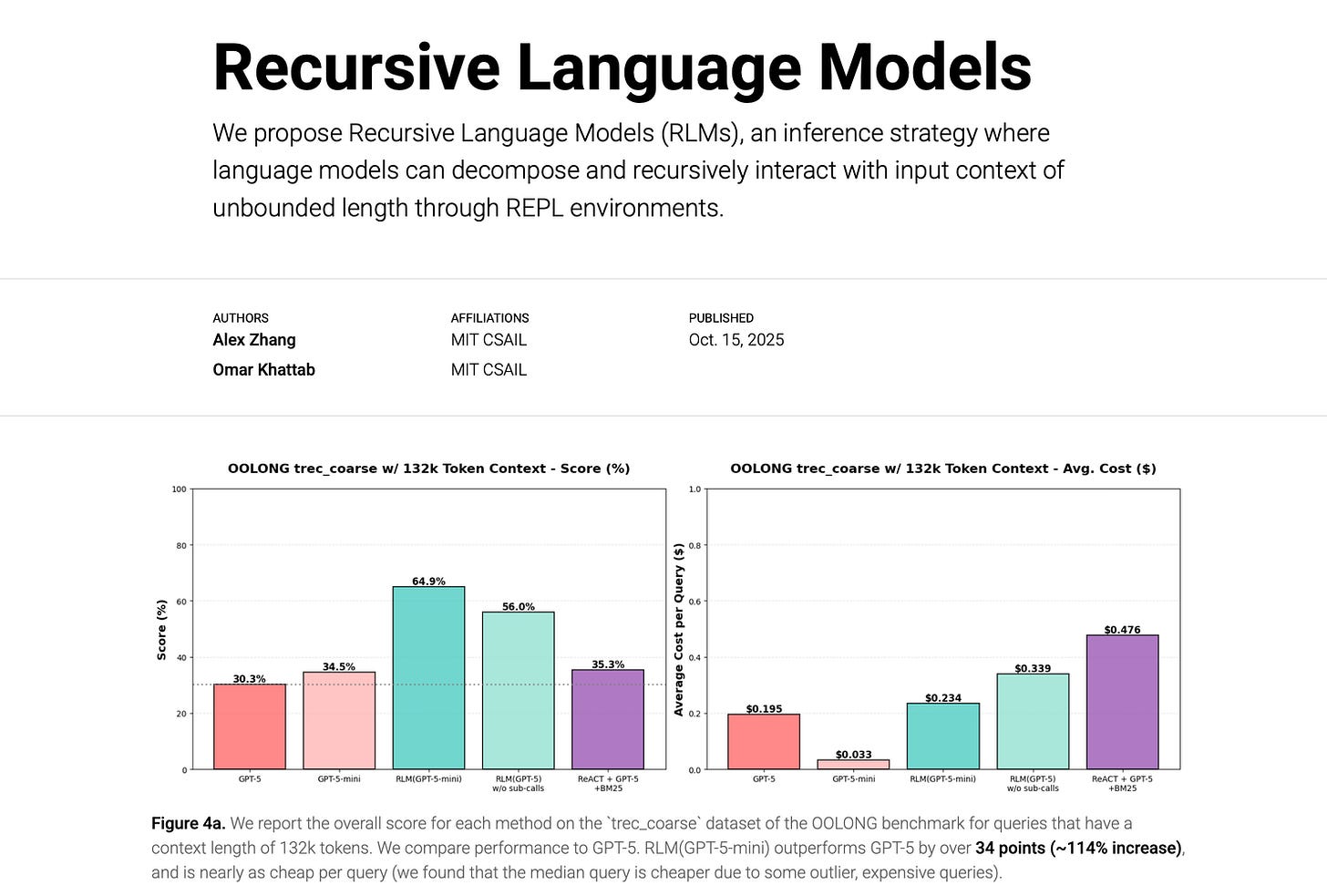

News: MIT has introduced Recursive Language Models (RLMs), a new inference approach enabling models to process extremely long contexts by recursively calling themselves or other models, effectively surpassing the fixed context limits seen in transformer architectures like GPT-5.

Details:

RLMs handle unbounded input lengths by chunking and recursively reprocessing context windows, mitigating performance degradation known as “context rot.”

In benchmark tests, GPT-5-mini RLM achieved 110%+ gains over base GPT-5 across tasks involving over 132,000 tokens, demonstrating scalable long-form reasoning.

The recursive mechanism sustains high accuracy over 1,000+ documents and millions of tokens without additional fine-tuning or model adjustments, as detailed in MIT’s thesis paper.

RLMs outperform retrieval-augmented baselines, signaling a shift toward deeper, context-aware inference for book-length reasoning and complex multi-document tasks.

Why It Matters: This MIT innovation breaks the traditional context window barrier, unlocking new frontiers for long-context AI. Recursive modeling enables continuous coherence over vast inputs—critical for legal research, academic summarization, and autonomous scientific discovery. By making deep, persistent reasoning scalable and cost-efficient, RLMs set a new foundation for models that think across millions of tokens with enduring context and precision.
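The chunk-and-recurse idea described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not MIT's implementation: `recursive_answer`, `chunk_lines`, and `toy_model` are hypothetical names, the "model" is a keyword filter standing in for an LLM call, and the context window is measured in lines rather than tokens.

```python
from typing import Callable, List

Model = Callable[[str, str], str]  # (query, context) -> answer


def chunk_lines(text: str, window: int) -> List[str]:
    """Split text into chunks of at most `window` lines each."""
    lines = text.splitlines()
    return ["\n".join(lines[i:i + window]) for i in range(0, len(lines), window)]


def recursive_answer(query: str, context: str, model: Model,
                     window: int = 4, depth: int = 0, max_depth: int = 8) -> str:
    """Answer `query` without ever showing the model more than `window` lines."""
    lines = context.splitlines()
    if len(lines) <= window or depth >= max_depth:
        # Base case: the context fits in the window (or is truncated at max depth).
        return model(query, "\n".join(lines[:window]))
    # Recursive case: answer each chunk separately...
    partials = [
        recursive_answer(query, chunk, model, window, depth + 1, max_depth)
        for chunk in chunk_lines(context, window)
    ]
    # ...then treat the non-empty partial answers as a new, shorter context.
    merged = "\n".join(p for p in partials if p)
    return recursive_answer(query, merged, model, window, depth + 1, max_depth)


def toy_model(query: str, context: str) -> str:
    """Hypothetical stand-in for an LLM call: keep lines that mention the query."""
    return "\n".join(line for line in context.splitlines() if query in line)


docs = [f"doc {i}: filler" for i in range(20)]
docs[3] = "doc 3: the launch code is 7421"
docs[17] = "doc 17: backup launch code is 9000"
print(recursive_answer("launch code", "\n".join(docs), toy_model))
```

The 20-line input never reaches the toy model in one piece: it sees five 4-line chunks, then one merged 2-line context. The `max_depth` guard is a design choice for the sketch, there to guarantee termination if partial answers fail to shrink.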

🕹️ New Scientific Benchmark for Defining AGI

News: A 2025 paper titled “A Definition of AGI”—authored by a powerhouse team including Dan Hendrycks, Dawn Song, Erik Brynjolfsson, and others—lays out the most rigorous framework to date for defining Artificial General Intelligence (AGI).

Details:

Rooted in the Cattell-Horn-Carroll (CHC) theory of cognitive abilities, borrowed from human psychometrics

Evaluates AGI on a wide spectrum: reasoning, problem-solving, learning, memory, transfer of knowledge

Cognitive tests span strata like short-term memory, fluid reasoning, and visual/auditory processing

Benchmarks based on human population norms, not raw metrics

No requirement for biological embodiment—substrate-agnostic performance is the bar

Why it matters: This paper introduces scientific standards for evaluating AGI, not hype. The cross-institutional authorship (UC Berkeley, Center for AI Safety, Google Research, University of Michigan, University of Oxford, MIT, Stanford, University of Montreal) signals a growing consensus on what AGI actually means. It’s a pivotal step toward building, measuring, and governing general intelligence systems.

🚀 ANTHROPIC Claude Haiku 4.5: Small Model, Big Value

News: Anthropic released Claude Haiku 4.5, a lightning-fast, cost-efficient small model designed to rival its May flagship, Claude Sonnet 4. The update leverages Anthropic’s Petri safety tooling and multi-model orchestration frameworks to deliver major efficiency gains.

Details:

Matches Sonnet 4’s coding performance at just $1 per million input tokens (one-third the cost), signaling an architectural leap in compute efficiency.

Outperforms Sonnet 4 in math, computing, and agentic tool use, benefitting from streamlined reasoning pipelines.

Supports parallel agents, enabling coordinated multi-agent workflows that push forward the frontier of autonomous system cooperation.

Available to all Claude users (free and paid tiers) and fully API-ready for integration.

Why It Matters: Anthropic’s pricing, at $1 per million input tokens, puts coding performance comparable to Sonnet 4 at roughly one-third of that model’s price, accelerating the commoditization of intelligence. As the cost of reasoning collapses, models like Haiku 4.5 democratize access to high-performance AI for developers, startups, and enterprises alike.
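For a rough sense of scale, the arithmetic behind the "one-third the cost" claim can be sketched as below. The $1 rate is the figure quoted above; the $3 rate for Sonnet 4 is inferred from "one-third the cost" rather than quoted, and the monthly token volume is a made-up example.

```python
def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost in USD for `tokens` tokens at a given price per million tokens."""
    return tokens / 1_000_000 * price_per_million


HAIKU_45_RATE = 1.00  # USD per million tokens, as quoted above
SONNET_4_RATE = 3.00  # assumption: implied by "one-third the cost"

monthly_tokens = 250_000_000  # hypothetical workload

haiku_cost = cost_usd(monthly_tokens, HAIKU_45_RATE)
sonnet_cost = cost_usd(monthly_tokens, SONNET_4_RATE)
print(f"Haiku 4.5: ${haiku_cost:,.2f}")
print(f"Sonnet 4:  ${sonnet_cost:,.2f}")
print(f"Savings:   ${sonnet_cost - haiku_cost:,.2f}")
```

At this hypothetical volume the gap is $500 a month. Real bills depend on the split between input and output tokens, which this sketch ignores.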

🎬 GOOGLE releases Veo 3.1 video model

News: Google has launched Veo 3.1, an upgraded AI video model designed for professional filmmakers. The release highlights Google’s push toward precision control and production-grade reliability in AI-generated video.

Details:

Character continuity: Uses up to three reference images to maintain consistent faces, body types, and wardrobe across scenes.

Framing control: Enables precise start and end frame adjustments for smoother transitions.

Audio-matched transitions: Synchronizes visuals to soundtrack rhythm for natural pacing.

Extended runtime: Generates up to one-minute coherent scenes through continuous clip stacking.

Unified rollout: Integrated across Google Flow, Vertex AI, and Gemini, giving access through both APIs and creative tools.

Why It Matters: Veo 3.1 marks Google’s pivot toward professional filmmakers, offering studio-level control over continuity and timing. While OpenAI’s Sora 2 dominates consumer buzz, Google is playing the long game - building dependable creative infrastructure rather than chasing virality. The result: a steady advance toward true AI-assisted film production.

SORA HACK 📺 Mark Cuban turns himself into a viral AI ad

News: Mark Cuban now allows Sora users to generate AI videos with his likeness... BUT every single one must promote his pharma company, Cost Plus Drugs.

Details:

Every Cuban-based Sora video is auto-configured to feature a Cost Plus Drugs plug—visually, via audio taglines, or within the script.

Cuban set his cameo preferences to enforce embedded branding like “Brought to you by Cost Plus Drugs.”

The campaign has gone viral, turning user-generated content into a swarm of personalized pharma ads.

Why it matters: It’s a clever flip on influencer marketing. Instead of paying for reach, Cuban made himself into a monetizable filter—spreading brand awareness at scale while reinforcing his mission to reduce drug prices.

Thanks for reading this far! Stay ahead of the curve with my daily AI newsletter—bringing you the latest in AI news, innovation, and leadership every single day, 365 days a year. See you tomorrow for more!

Wow, the Cognition SWE-grep speeds really jumped out at me; makes you wonder if our dev teams will even remember how to grep manually soon. It's a huge leap, but how do you see the sandbox limitations scaling with truly complex refactoring tasks?