$80B Lesson: Oracle's CapEx Warning Defines the New AI Profitability Bar

Oracle's CapEx warning is signaling a structural shift: the market is now demanding cash flow discipline and utilization over raw GPU backlogs.

Good morning AI entrepreneurs & enthusiasts,

The era of “blind AI optimism” has officially met its first major resistance. Last week, Oracle became the epicenter of a massive market recalibration, proving that even the most aggressive AI backlog can’t shield a company from the harsh realities of physical infrastructure and capital discipline.

In today’s AI news:

Oracle’s AI Reality Check: The cost and constraints of global ambition

Runway Unveils GWM-1: The dawn of interactive general world models

Google unlocks real-time audio translation for all headphones

Zoom’s Orchestration Blueprint: A new SOTA on the reasoning frontier

Today’s Top Tools + Quick News

📉 “Oracle Shock”: The AI Reality Check

News: Oracle’s recent fiscal Q2 earnings erased $80 billion in market cap, signaling a fundamental shift in how Wall Street values AI infrastructure players. The selloff was a “perfect storm”: sharp capital expenditure hikes (now projected at $50 billion for the year), disappointing revenue execution, and a flip into negative free cash flow.

Details:

Oracle stunned analysts by disclosing $12 billion in quarterly capex, triple the year-ago level and 50% above the $8 billion Wall Street expected.

Reports emerged that some of the dedicated multi-gigawatt campuses for OpenAI’s “Stargate” project have slipped from 2027 to 2028 because of labor and material shortages.

Despite a massive Remaining Performance Obligation (RPO), the “world of atoms” (power, transformers, and specialized networking) is creating a flatter revenue recognition curve than investors initially modeled.

The “Oracle Shock” rippled through the entire AI stack, pulling down heavyweights like Nvidia, AMD, and Broadcom as the market re-evaluated the ROI of credit-fueled GPU build-outs.

Why It Matters: Oracle has been the bellwether for the “infrastructure leg” of this cycle, and its struggle to balance unprecedented capex with physical construction timelines suggests that the path to AGI is capital-intensive and lumpy, not a smooth S-curve. The biggest companies must now prove utilization and cash flow discipline rather than just tout massive backlogs. For the first time in this cycle, the market is signaling that it cares more about when a data center turns on than how many GPUs are inside it.
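For a sense of scale, the capex figures quoted in the details can be run through a quick back-of-the-envelope check. This is only a sketch using the numbers in this section. The prior-year figure is inferred from “tripled” and is an assumption, not a reported number.

```python
# Figures quoted in this section, in billions of USD.
reported_capex = 12.0   # quarterly capex Oracle disclosed
expected_capex = 8.0    # what Wall Street expected

# How far the reported number overshot expectations, as a percentage.
surprise_pct = (reported_capex - expected_capex) / expected_capex * 100

# "Tripled from the previous year" implies a year-ago quarter of roughly
# one third of the reported figure (an inference, not a reported number).
implied_prior_year = reported_capex / 3

print(f"Capex surprise: {surprise_pct:.0f}% above expectations")
print(f"Implied year-ago quarterly capex: ~${implied_prior_year:.0f}B")
```

In other words, the miss was not a rounding error: spending came in half again as large as the Street had modeled.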

🌍 Pixels as Physics: Runway’s GWM-1 World Model Unlocks the AGI Simulation Layer

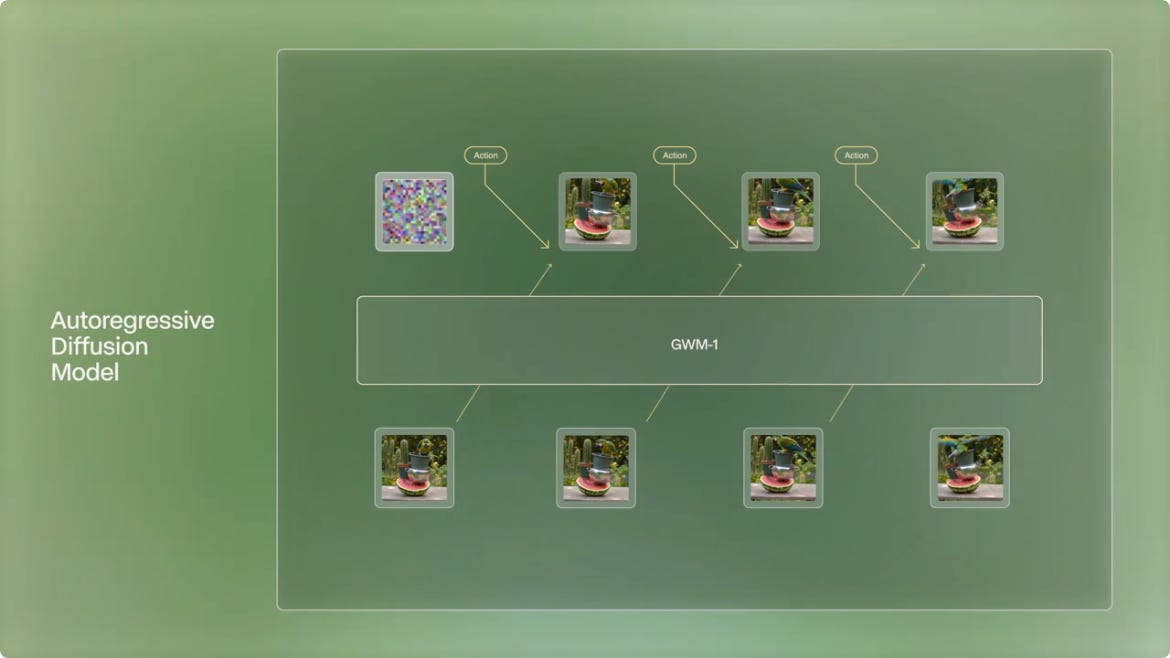

News: Runway has released GWM-1, a general-purpose world model built on the Gen-4.5 architecture that uses pixel prediction to simulate how environments evolve over time. Unlike standard video generators, GWM-1 is action-conditioned, meaning users can influence the simulation in real time—essentially turning video generation into an interactive, physics-aware “world engine.”

Details:

GWM-1 operates at 24 FPS at 720p resolution, supporting multi-minute, coherent sequences designed for interactive exploration.

The model allows for steerable generation through camera poses, robotic commands, and audio inputs, where the “world” reacts logically to the user’s choices.

The rollout includes GWM-Worlds (for explorable environments), GWM-Robotics (for synthetic robot policy training), and GWM-Avatars (for dialogue-driven virtual humans).

By training on massive video datasets, the model learns physical regularities—like gravity, lighting, and occlusion—implicitly, rather than through hard-coded math.

Why It Matters: This is a paradigm shift from Generative AI to Simulation AI, positioning Runway as a direct competitor to foundational research labs like Google and OpenAI. Treating a world model as an internal simulator gives a system a way to reason, plan, and take agentic actions within digital space. This provides the “digital twin” infrastructure necessary to train robots and autonomous systems in high-fidelity environments before deployment. We are witnessing the birth of an “operating system for reality” that will fundamentally change how we build games, train machines, and interact with the digital world.
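To make “action-conditioned” concrete, here is a toy sketch of the loop: each new frame is predicted from the previous frame plus a user action. Nothing here reflects Runway’s actual model or API. `ToyWorldModel`, `predict_next`, and the action names are hypothetical, and a hand-written rule stands in for the learned pixel predictor.

```python
from dataclasses import dataclass
from typing import List

Frame = List[int]  # toy stand-in for an image: one row of pixel values


@dataclass
class ToyWorldModel:
    """Hypothetical stand-in for a learned next-frame predictor."""
    width: int = 8

    def initial_frame(self) -> Frame:
        frame = [0] * self.width
        frame[self.width // 2] = 1  # a single "object" in the middle
        return frame

    def predict_next(self, frame: Frame, action: str) -> Frame:
        # A real world model would predict pixels with a neural network.
        # Here, panning the camera just shifts the object the opposite way.
        shift = {"pan_left": 1, "pan_right": -1, "hold": 0}[action]
        pos = frame.index(1)
        new_pos = min(max(pos + shift, 0), self.width - 1)
        nxt = [0] * self.width
        nxt[new_pos] = 1
        return nxt


def rollout(model: ToyWorldModel, actions: List[str]) -> List[Frame]:
    """Generate frames one at a time, conditioning each on the latest action."""
    frames = [model.initial_frame()]
    for action in actions:
        frames.append(model.predict_next(frames[-1], action))
    return frames


frames = rollout(ToyWorldModel(), ["pan_left", "pan_left", "hold", "pan_right"])
for f in frames:
    print("".join("#" if p else "." for p in f))
```

The point of the sketch is the control flow: unlike a standard video generator that renders a whole clip from one prompt, the next frame always depends on what the user just did.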

🎧 Google adds real-time audio translation to any headphones

News: Google has deployed a suite of Gemini-powered translation upgrades, headlined by a beta feature that streams live speech translations to any connected headphones. This move marks a major strategic shift, democratizing a capability that was previously a “walled garden” feature restricted to its proprietary Pixel Buds hardware.

Details:

The new Gemini 2.5 Flash Native Audio model drastically improves conversational fluidity, instruction following, and latency for live voice agents.

This integration works with any earbuds on Android, supporting over 70 languages while maintaining the speaker’s original tone and cadence.

By leveraging 2.5 Flash’s world knowledge, the system now interprets slang and specific cultural idioms contextually rather than literally.

Google expanded its language practice mode to 20 additional countries, adding streak tracking and real-time pronunciation feedback.

Why It Matters: The “universal translators” of science fiction are no longer a dream; they are a firmware update. By making this tech hardware-agnostic, Google is positioning Gemini as the literal interface for human-to-human interaction. As this technology scales into YouTube and social platforms, we are entering a post-language era where the friction of global communication effectively hits zero.

🤝 Zoom’s Orchestration Blueprint: A New SOTA on the Reasoning Frontier

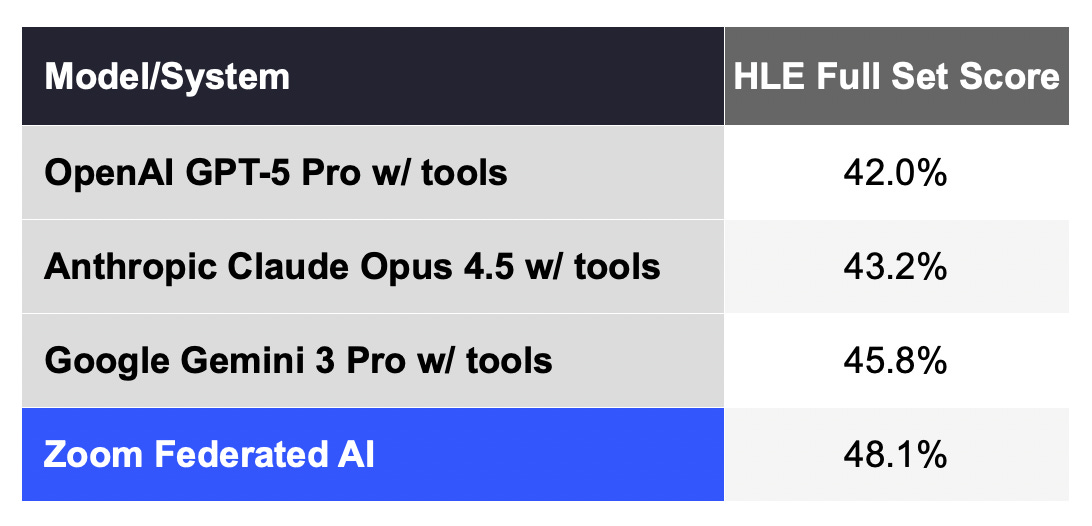

News: While the infrastructure war hits financial bottlenecks, the war for model dominance continues at the application layer. Zoom just shocked the industry by announcing that its “federated” AI system scored 48.1% on “Humanity’s Last Exam.” This result puts the communications giant ahead of several frontier models on one of the most grueling expert-level reasoning tests currently available.

Details:

Zoom’s “Z-scorer” system dynamically orchestrates models from OpenAI, Anthropic, and Google alongside its own specialized small models.

Their score of 48.1% edges out Gemini 3 Pro (45.8%), though it still sits behind the recently released GPT-5.2 at 50%.

This logic will anchor AI Companion 3.0, promising superior summarization and autonomous task execution.

Why It Matters: Zoom is no longer just a video app; it is becoming a powerhouse in AI orchestration. This “federated” approach, mixing the best of all worlds, is the blueprint for the enterprise. It suggests that you don’t need to build the biggest model to win; you just need to be the smartest at choosing which model to use for the task at hand.
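For builders curious what “choosing which model to use” can look like in code, here is a minimal routing sketch. It is not Zoom’s method, and nothing in this issue describes how Z-scorer actually decides. The model names, keyword rules, and stub backends are all hypothetical placeholders for real API calls and a learned router.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]


def make_stub(name: str) -> Model:
    """Build a placeholder model that only tags the prompt with its name."""
    return lambda prompt: f"{name}: {prompt}"


# Hypothetical pool of backends (stand-ins for real hosted models).
MODELS: Dict[str, Model] = {
    "small-summarizer": make_stub("small-summarizer"),
    "frontier-reasoner": make_stub("frontier-reasoner"),
    "general-chat": make_stub("general-chat"),
}

# Ordered routing rules: (keywords, model name). First match wins.
RULES: List[Tuple[Tuple[str, ...], str]] = [
    (("summarize", "recap", "notes"), "small-summarizer"),
    (("prove", "derive", "step by step"), "frontier-reasoner"),
]


def route(prompt: str) -> str:
    """Pick a model name for the prompt, falling back to a general model."""
    text = prompt.lower()
    for keywords, model_name in RULES:
        if any(k in text for k in keywords):
            return model_name
    return "general-chat"


def answer(prompt: str) -> str:
    """Send the prompt to whichever model the router selected."""
    return MODELS[route(prompt)](prompt)


print(route("Summarize yesterday's meeting"))
print(route("Prove that the sum of two even numbers is even"))
print(route("What time is it in Tokyo?"))
```

A production orchestrator would likely replace the keyword rules with a scoring model and might query several backends at once, but the shape is the same: a cheap decision up front, then a call to the model best suited to the task.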

Today’s Top Tools

Disco - Google’s experimental engine for generating custom web apps on the fly, accelerating rapid prototyping and deployment.

GPT-5.2 - OpenAI’s latest, offering multimodal logic for complex reasoning and agentic workflows, pushing the frontier of AGI capability.

Cursor - The visual editor redefining dev cycles, merging drag-and-drop with AI agents for accelerated prototyping and codebase mastery.

Quick News

xAI is partnering with the government of El Salvador to deploy Grok-powered education systems across the country’s national school curriculum.

Adobe has integrated Photoshop and Express directly into ChatGPT, allowing users to perform complex image edits via simple natural language.

OpenAI is eliminating the 6-month wait for employee stock compensation, a clear signal that the war for top-tier AI talent is reaching a boiling point.

Google promoted Amin Vahdat to Chief Technologist to oversee AI infrastructure, streamlining the reporting line directly to Sundar Pichai.
